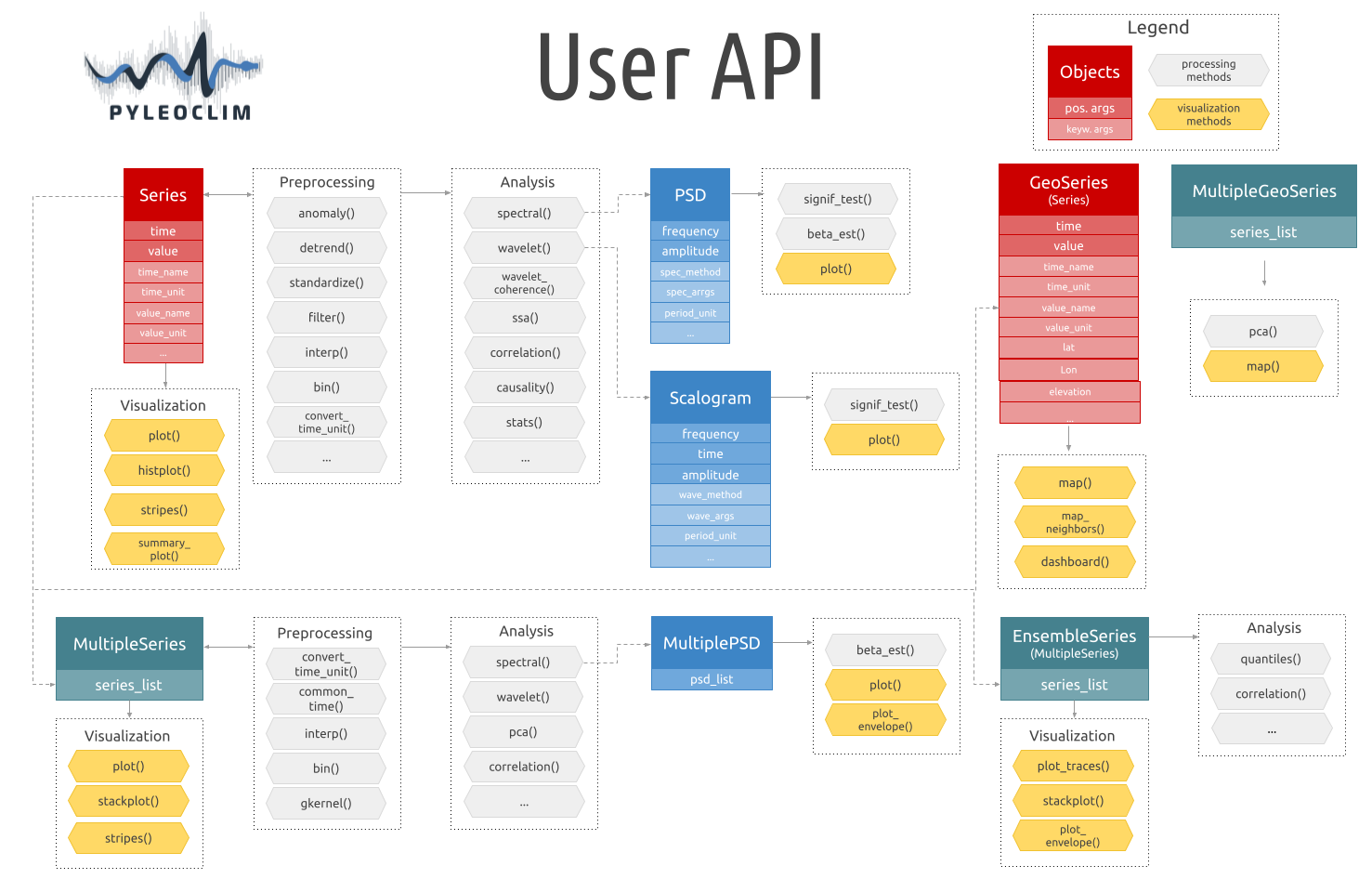

Pyleoclim User API

Pyleoclim, like a lot of other Python packages, follows an object-oriented design. It sounds fancy, but it really is quite simple. What this means for you is that we’ve gone through the trouble of coding up a lot of timeseries analysis methods that apply in various situations - so you don’t have to worry about that. These situations are described in classes, the beauty of which is called “inheritance” (see link above). Basically, it allows to define methods that will automatically apply to your dataset, as long as you put your data within one of those classes. A major advantage of object-oriented design is that you, the user, can harness the power of Pyleoclim methods in very few lines of code through the user API without ever having to get your hands dirty with our code (unless you want to, of course). The flipside is that any user would do well to understand Pyleoclim classes, what they are intended for, and what methods they support.

The following describes the various classes that undergird the Pyleoclim edifice.

Series (pyleoclim.Series)

- class pyleoclim.core.series.Series(time, value, time_unit=None, time_name=None, value_name=None, value_unit=None, label=None, importedFrom=None, archiveType=None, control_archiveType=False, log=None, keep_log=False, sort_ts='ascending', dropna=True, verbose=True, clean_ts=False, auto_time_params=None)[source]

The Series class describes the most basic objects in Pyleoclim. A Series is a simple dictionary that contains 3 things:

value, an array of real-valued numbers;

time, a coordinate axis at which those values were obtained ;

optionally, some metadata about both axes, like units, labels and origin.

How to create, manipulate and use such objects is described in PyleoTutorials.

- Parameters:

time (list or numpy.array) – time axis (prograde or retrograde)

value (list of numpy.array) – values of the dependent variable (y)

time_unit (string) – Units for the time vector (e.g., ‘ky BP’). Default is None. in which case ‘years CE’ are assumed

time_name (string) – Name of the time vector (e.g., ‘Time’,’Age’). Default is None. This is used to label the time axis on plots

value_name (string) – Name of the value vector (e.g., ‘temperature’) Default is None

value_unit (string) – Units for the value vector (e.g., ‘deg C’) Default is None

label (string) – Name of the time series (e.g., ‘NINO 3.4’) Default is None

log (dict) – Dictionary of tuples documentating the various transformations applied to the object

keep_log (bool) – Whether to keep a log of applied transformations. False by default

importedFrom (string) – source of the dataset. If it came from a LiPD file, this could be the datasetID property

archiveType (string) – climate archive, one of ‘Borehole’, ‘Coral’, ‘FluvialSediment’, ‘GlacierIce’, ‘GroundIce’, ‘LakeSediment’, ‘MarineSediment’, ‘Midden’, ‘MolluskShell’, ‘Peat’, ‘Sclerosponge’, ‘Shoreline’, ‘Speleothem’, ‘TerrestrialSediment’, ‘Wood’ Reference: https://lipdverse.org/vocabulary/archivetype/

control_archiveType ([True, False]) – Whether to standardize the name of the archiveType agains the vocabulary from: https://lipdverse.org/vocabulary/paleodata_proxy/. If set to True, will only allow for these terms and automatically convert known synonyms to the standardized name. Only standardized variable names will be automatically assigned a color scheme. Default is False.

dropna (bool) – Whether to drop NaNs from the series to prevent downstream functions from choking on them defaults to True

sort_ts (str) – Direction of sorting over the time coordinate; ‘ascending’ or ‘descending’ Defaults to ‘ascending’

verbose (bool) – If True, will print warning messages if there are any

clean_ts (boolean flag) – set to True to remove the NaNs and make time axis strictly prograde with duplicated timestamps reduced by averaging the values Default is None (marked for deprecation)

auto_time_params (bool,) – If True, uses tsbase.disambiguate_time_metadata to ensure that time_name and time_unit are usable by Pyleoclim. This may override the provided metadata. If False, the provided time_name and time_unit are used. This may break some functionalities (e.g. common_time and convert_time_unit), so use at your own risk. If not provided, code will set to True for internal consistency.

Examples

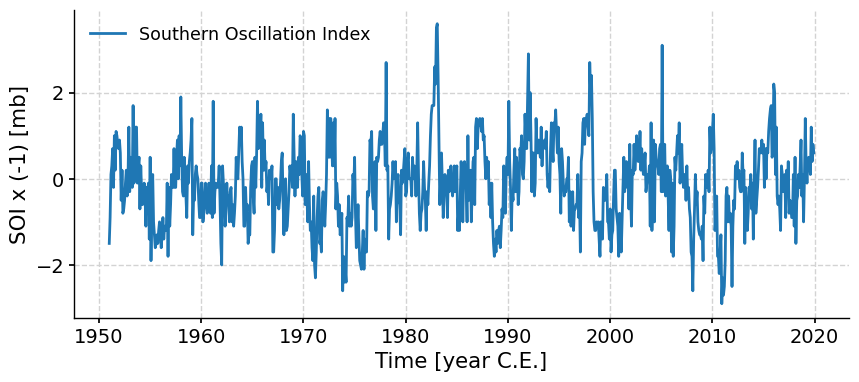

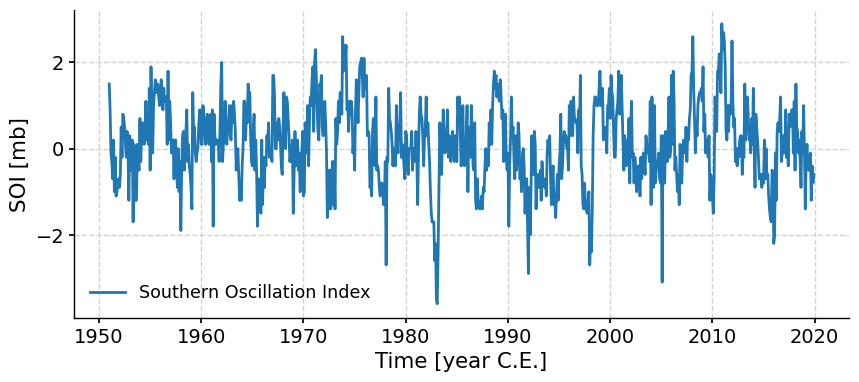

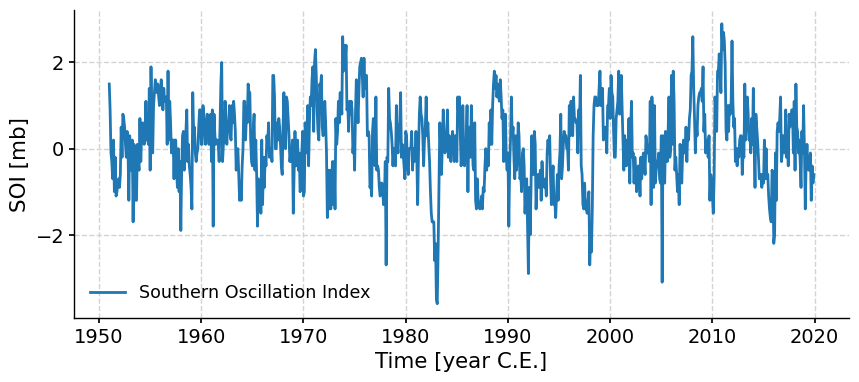

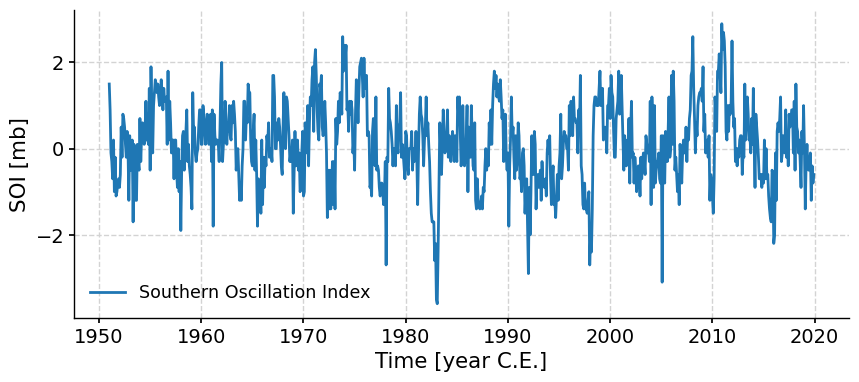

Import the Southern Oscillation Index (SOI) and display a quick synopsis:

import pyleoclim as pyleo soi = pyleo.utils.load_dataset('SOI') soi.view()

SOI [mb] Time [year C.E.] 1951.000000 1.5 1951.083333 0.9 1951.166667 -0.1 1951.250000 -0.3 1951.333333 -0.7 ... ... 2019.583333 -0.1 2019.666667 -1.2 2019.750000 -0.4 2019.833333 -0.8 2019.916667 -0.6 828 rows × 1 columns

- Attributes:

datetime_indexConvert time to pandas DatetimeIndex.

- metadata

Methods

bin([keep_log])Bin values in a time series

causality(target_series[, method, timespan, ...])Perform causality analysis with the target timeseries. Specifically, whether there is information in the target series that influenced the original series.

center([timespan, keep_log])Centers the series (i.e.

clean([verbose, keep_log])Clean up the timeseries by removing NaNs and sort with increasing time points

convert_time_unit([time_unit, keep_log])Convert the time units of the Series object

copy()Make a copy of the Series object

correlation(target_series[, alpha, ...])Estimates the correlation and its associated significance between two time series (not ncessarily IID).

detrend([method, keep_log, preserve_mean])Detrend Series object

equals(ts[, index_tol, value_tol])Test whether two objects contain the same elements (values and datetime_index) A printout is returned if metadata are different, but the statement is considered True as long as data match.

fill_na([timespan, dt, keep_log])Fill NaNs into the timespan

filter([cutoff_freq, cutoff_scale, method, ...])Filtering methods for Series objects using four possible methods:

flip([axis, keep_log])Flips the Series along one or both axes

from_csv(path)Read in Series object from CSV file.

from_json(path)Creates a pyleoclim.Series from a JSON file

gaussianize([keep_log])Gaussianizes the timeseries (i.e.

gkernel([step_style, keep_log, step_type])Coarse-grain a Series object via a Gaussian kernel.

histplot([figsize, title, savefig_settings, ...])Plot the distribution of the timeseries values

interp([method, keep_log])Interpolate a Series object onto a new time axis

is_evenly_spaced([tol])Check if the Series time axis is evenly-spaced, within tolerance

Initialization of plot labels based on Series metadata

outliers([method, remove, settings, ...])Remove outliers from timeseries data.

plot([figsize, marker, markersize, color, ...])Plot the timeseries

resample(rule[, keep_log])Run analogue to pandas.Series.resample.

Generate a resolution object

segment([factor, verbose])Gap detection

sel([value, time, tolerance])Slice Series based on 'value' or 'time'.

slice(timespan)Slicing the timeseries with a timespan (tuple or list)

sort([verbose, ascending, keep_log])Ensure timeseries is set to a monotonically increasing axis.

spectral([method, freq_method, freq_kwargs, ...])Perform spectral analysis on the timeseries

ssa([M, nMC, f, trunc, var_thresh, online])Singular Spectrum Analysis

standardize([keep_log, scale])Standardizes the series ((i.e.

stats()Compute basic statistics from a Series

stripes([figsize, cmap, ref_period, sat, ...])Represents the Series as an Ed Hawkins "stripes" pattern

summary_plot(psd, scalogram[, figsize, ...])Produce summary plot of timeseries.

surrogates([method, number, length, seed, ...])Generate surrogates of the Series object according to "method"

to_csv([metadata_header, path])Export Series to csv

to_json([path])Export the pyleoclim.Series object to a json file

to_pandas([paleo_style])Export to pandas Series

view()Generates a DataFrame version of the Series object, suitable for viewing in a Jupyter Notebook

wavelet([method, settings, freq_method, ...])Perform wavelet analysis on a timeseries

wavelet_coherence(target_series[, method, ...])Performs wavelet coherence analysis with the target timeseries

from_pandas

pandas_method

- bin(keep_log=False, **kwargs)[source]

Bin values in a time series

- Parameters:

keep_log (Boolean) – if True, adds this step and its parameters to the series log.

kwargs – Arguments for binning function. See pyleoclim.utils.tsutils.bin for details

- Returns:

new – An binned Series object

- Return type:

See also

pyleoclim.utils.tsutils.binbin the series values into evenly-spaced time bins

- causality(target_series, method='liang', timespan=None, settings=None, common_time_kwargs=None)[source]

- Perform causality analysis with the target timeseries. Specifically, whether there is information in the target series that influenced the original series.

If the two series have different time axes, they are first placed on a common timescale (in ascending order).

- Parameters:

target_series (Series) – A pyleoclim Series object on which to compute causality

method ({'liang', 'granger'}) – The causality method to use.

timespan (tuple) – The time interval over which to perform the calculation

settings (dict) – Parameters associated with the causality methods. Note that each method has different parameters. See individual methods for details

common_time_kwargs (dict) – Parameters for the method MultipleSeries.common_time(). Will use interpolation by default.

- Returns:

res – Dictionary containing the results of the the causality analysis. See indivudal methods for details

- Return type:

dict

See also

pyleoclim.utils.causality.liang_causalityLiang causality

pyleoclim.utils.causality.granger_causalityGranger causality

Examples

Liang causality

import pyleoclim as pyleo ts_nino=pyleo.utils.load_dataset('NINO3') ts_air=pyleo.utils.load_dataset('AIR')

We use the specific params below to lighten computations; you may drop settings for real work

liang_N2A = ts_air.causality(ts_nino, settings={'nsim': 20, 'signif_test': 'isopersist'}) print(liang_N2A) liang_A2N = ts_nino.causality(ts_air, settings={'nsim': 20, 'signif_test': 'isopersist'}) print(liang_A2N) liang_N2A['T21']/liang_A2N['T21']

{'T21': 0.01644548028647295, 'tau21': 0.011968992003953967, 'Z': 1.3740071244963796, 'dH1_star': -0.6359251528278479, 'dH1_noise': 0.3521058551681981, 'signif_qs': [0.005, 0.025, 0.05, 0.95, 0.975, 0.995], 'T21_noise': array([-9.84643639e-05, -9.84643639e-05, -6.55363879e-05, 1.78004343e-03, 2.08564347e-03, 2.08564347e-03]), 'tau21_noise': array([-7.07610654e-05, -7.07610654e-05, -4.73114672e-05, 1.30222211e-03, 1.53734026e-03, 1.53734026e-03])}{'T21': 0.005840218794917537, 'tau21': 0.047318261599206914, 'Z': 0.12342420447279118, 'dH1_star': -0.5094709112672596, 'dH1_noise': 0.4432108271335334, 'signif_qs': [0.005, 0.025, 0.05, 0.95, 0.975, 0.995], 'T21_noise': array([-0.00099452, -0.00099452, -0.00093254, 0.0001269 , 0.0001331 , 0.0001331 ]), 'tau21_noise': array([-0.01029959, -0.01029959, -0.00879044, 0.00105281, 0.00107306, 0.00107306])}2.815901400951736

Both information flows (T21) are positive, but the flow from NINO3 to AIR is about 3x as large as the other way around, suggesting that NINO3 influences AIR much more than the other way around, which conforms to physical intuition.

To implement Granger causality, simply specfiy the method:

granger_A2N = ts_nino.causality(ts_air, method='granger') granger_N2A = ts_air.causality(ts_nino, method='granger')

Granger Causality number of lags (no zero) 1 ssr based F test: F=20.8492 , p=0.0000 , df_denom=1592, df_num=1 ssr based chi2 test: chi2=20.8885 , p=0.0000 , df=1 likelihood ratio test: chi2=20.7529 , p=0.0000 , df=1 parameter F test: F=20.8492 , p=0.0000 , df_denom=1592, df_num=1 Granger Causality number of lags (no zero) 1 ssr based F test: F=18.6927 , p=0.0000 , df_denom=1592, df_num=1 ssr based chi2 test: chi2=18.7280 , p=0.0000 , df=1 likelihood ratio test: chi2=18.6189 , p=0.0000 , df=1 parameter F test: F=18.6927 , p=0.0000 , df_denom=1592, df_num=1

Note that the output is fundamentally different for the two methods. Granger causality cannot discriminate between NINO3 -> AIR or AIR -> NINO3, in this case. This is not unusual, and one reason why it is no longer in wide use.

- center(timespan=None, keep_log=False)[source]

Centers the series (i.e. renove its estimated mean)

- Parameters:

timespan (tuple or list) – The timespan over which the mean must be estimated. In the form [a, b], where a, b are two points along the series’ time axis.

keep_log (Boolean) – if True, adds the previous mean and method parameters to the series log.

- Returns:

new – The centered series object

- Return type:

- clean(verbose=False, keep_log=False)[source]

Clean up the timeseries by removing NaNs and sort with increasing time points

- Parameters:

verbose (bool) – If True, will print warning messages if there is any

keep_log (Boolean) – if True, adds this step and its parameters to the series log.

- Returns:

new – Series object with removed NaNs and sorting

- Return type:

- convert_time_unit(time_unit='ky BP', keep_log=False)[source]

Convert the time units of the Series object

- Parameters:

time_unit (str) –

the target time unit, possible input: {

’year’, ‘years’, ‘yr’, ‘yrs’, ‘CE’, ‘AD’, ‘y BP’, ‘yr BP’, ‘yrs BP’, ‘year BP’, ‘years BP’, ‘ky BP’, ‘kyr BP’, ‘kyrs BP’, ‘ka BP’, ‘ka’, ‘my BP’, ‘myr BP’, ‘myrs BP’, ‘ma BP’, ‘ma’,

}

keep_log (Boolean) – if True, adds this step and its parameter to the series log.

Examples

ts = pyleo.utils.load_dataset('SOI') tsBP = ts.convert_time_unit(time_unit='yrs BP') print('Original timeseries:') print('time unit:', ts.time_unit) print('time:', ts.time[:10]) print() print('Converted timeseries:') print('time unit:', tsBP.time_unit) print('time:', tsBP.time[:10])

Original timeseries: time unit: year C.E. time: [1951. 1951.083333 1951.166667 1951.25 1951.333333 1951.416667 1951.5 1951.583333 1951.666667 1951.75 ] Converted timeseries: time unit: yrs BP time: [-69.91471656 -69.83138256 -69.74804957 -69.66471654 -69.58138257 -69.49804955 -69.41471656 -69.33138255 -69.24804956 -69.16471654]

- copy()[source]

Make a copy of the Series object

- Returns:

Series – A copy of the Series object

- Return type:

- correlation(target_series, alpha=0.05, statistic='pearsonr', method='phaseran', timespan=None, settings=None, common_time_kwargs=None, seed=None, mute_pbar=False)[source]

Estimates the correlation and its associated significance between two time series (not ncessarily IID).

The significance of the correlation is assessed using one of the following methods:

‘ttest’: T-test adjusted for effective sample size.

‘isopersistent’: AR(1) modeling of x and y.

‘isospectral’: phase randomization of original inputs. (default)

The T-test is a parametric test, hence computationally cheap, but can only be performed in ideal circumstances. The others are non-parametric, but their computational requirements scale with the number of simulations.

The choise of significance test and associated number of Monte-Carlo simulations are passed through the settings parameter.

- Parameters:

target_series (Series) – A pyleoclim Series object

alpha (float) – The significance level (default: 0.05)

statistic (str) –

- statistic being evaluated. Can use any of the SciPy-supported ones:

https://docs.scipy.org/doc/scipy/reference/stats.html#association-correlation-tests Currently supported: [‘pearsonr’,’spearmanr’,’pointbiserialr’,’kendalltau’,’weightedtau’]

Default: ‘pearsonr’.

method (str, {'ttest','built-in','ar1sim','phaseran'}) – method for significance testing. Default is ‘phaseran’ ‘ttest’ implements the T-test with degrees of freedom adjusted for autocorrelation, as done in [1] ‘built-in’ uses the p-value that ships with the statistic. The old options ‘isopersistent’ and ‘isospectral’ still work, but trigger a deprecation warning. Note that ‘weightedtau’ does not have a known distribution, so the ‘built-in’ method returns an error in that case.

timespan (tuple) – The time interval over which to perform the calculation

- settingsdict

Parameters for the correlation function, including:

- nsimint

the number of simulations (default: 1000)

- methodstr, {‘ttest’,’ar1sim’,’phaseran’ (default)}

method for significance testing

- surr_settingsdict

Parameters for surrogate generator. See individual methods for details.

- common_time_kwargsdict

Parameters for the method MultipleSeries.common_time(). Will use interpolation by default.

- seedfloat or int

random seed for isopersistent and isospectral methods

- mute_pbarbool, optional

Mute the progress bar. The default is False.

- Returns:

corr – the result object, containing

- rfloat

correlation coefficient

- pfloat

the p-value

- signifbool

true if significant; false otherwise Note that signif = True if and only if p <= alpha.

- alphafloat

the significance level

- Return type:

pyleoclim.Corr

See also

pyleoclim.utils.correlation.corr_sigCorrelation function (marked for deprecation)

pyleoclim.utils.correlation.associationSciPy measures of association between variables

pyleoclim.series.surrogatesparametric and non-parametric surrogates of any Series object

pyleoclim.multipleseries.common_timeAligning time axes

References

[1] Hu, J., J. Emile-Geay, and J. Partin (2017), Correlation-based interpretations of paleoclimate data – where statistics meet past climates, Earth and Planetary Science Letters, 459, 362–371, doi:10.1016/j.epsl.2016.11.048.

Examples

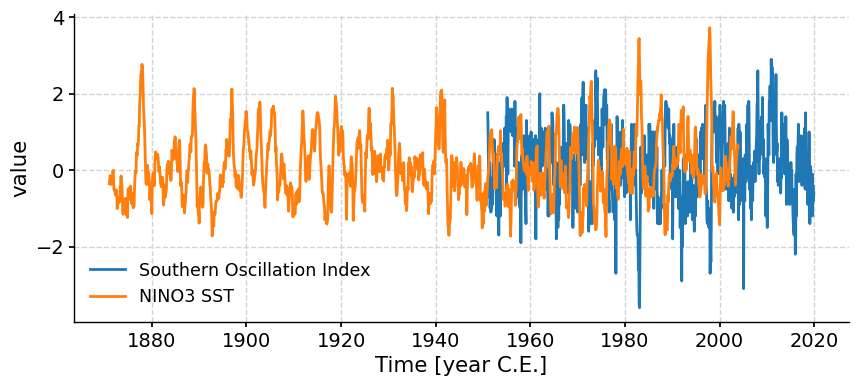

Correlation between the Nino3.4 index and the Deasonalized All Indian Rainfall Index

import pyleoclim as pyleo ts_air = pyleo.utils.load_dataset('AIR') ts_nino = pyleo.utils.load_dataset('NINO3') # with `nsim=20` and default `method='phaseran'` # set an arbitrary random seed to fix the result corr_res = ts_nino.correlation(ts_air, settings={'nsim': 20}, seed=2333) print(corr_res) # changing the statistic corr_res = ts_nino.correlation(ts_air, statistic='kendalltau') print(corr_res) # using a simple t-test with DOFs adjusted for autocorrelation # set an arbitrary random seed to fix the result corr_res = ts_nino.correlation(ts_air, method='ttest') print(corr_res) # using "isopersistent" surrogates (AR(1) simulation) # set an arbitrary random seed to fix the result corr_res = ts_nino.correlation(ts_air, method = 'ar1sim', settings={'nsim': 20}, seed=2333) print(corr_res)

correlation p-value signif. (α: 0.05) ------------- --------- ------------------- -0.152394 < 1e-6 1correlation p-value signif. (α: 0.05) ------------- --------- ------------------- -0.0626788 < 1e-2 1

correlation p-value signif. (α: 0.05) ------------- --------- ------------------- -0.152394 < 1e-7 Truecorrelation p-value signif. (α: 0.05) ------------- --------- ------------------- -0.152394 < 1e-6 1

- property datetime_index

Convert time to pandas DatetimeIndex.

Note: conversion will happen using time_unit, and will assume:

the number of seconds per year is calculated using UDUNITS, see http://cfconventions.org/cf-conventions/cf-conventions#time-coordinate

time refers to the Gregorian calendar. If using a different calendar, then please make sure to do any conversions before hand.

- detrend(method='emd', keep_log=False, preserve_mean=False, **kwargs)[source]

Detrend Series object

- Parameters:

method (str, optional) –

The method for detrending. The default is ‘emd’. Options include:

”linear”: the result of a n ordinary least-squares stright line fit to y is subtracted.

”constant”: only the mean of data is subtracted.

”savitzky-golay”, y is filtered using the Savitzky-Golay filters and the resulting filtered series is subtracted from y.

”emd” (default): Empirical mode decomposition. The last mode is assumed to be the trend and removed from the series

keep_log (boolean) – if True, adds the removed trend and method parameters to the series log.

preserve_mean (boolean) – if True, ensures that the mean of the series is preserved despite the detrending

kwargs (dict) – Relevant arguments for each of the methods.

- Returns:

new – Detrended Series object in “value”, with new field “trend” added

- Return type:

See also

pyleoclim.utils.tsutils.detrenddetrending wrapper functions

Examples

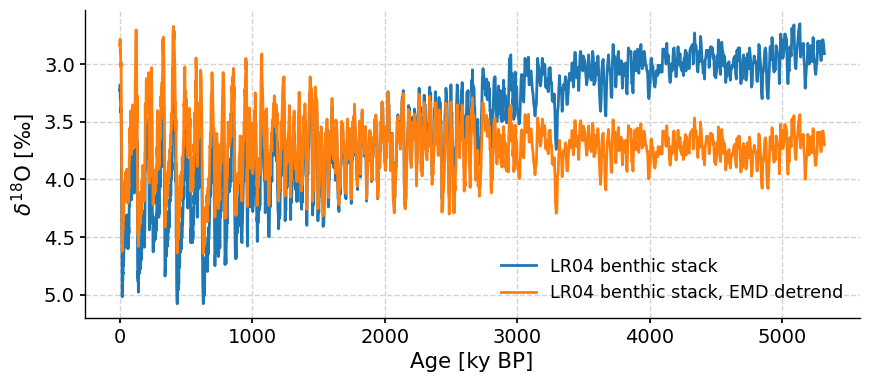

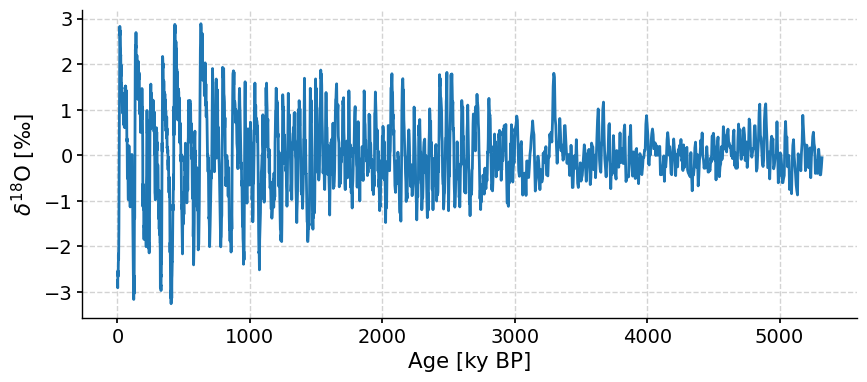

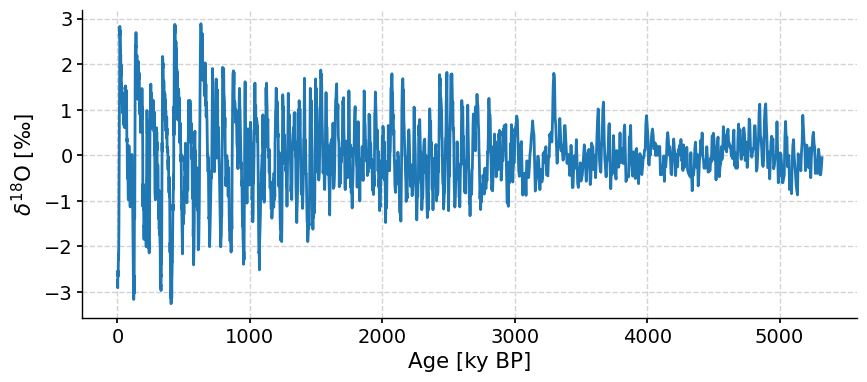

lr04 = pyleo.utils.load_dataset('LR04') fig, ax = lr04.plot(invert_yaxis=True) ts_emd = lr04.detrend(method='emd',preserve_mean=True) ts_emd.plot(label=lr04.label+', EMD detrend',ax=ax)

<Axes: xlabel='Age [ky BP]', ylabel='$\\delta^{18} \\mathrm{O}$ [‰]'>

- equals(ts, index_tol=5, value_tol=1e-05)[source]

Test whether two objects contain the same elements (values and datetime_index) A printout is returned if metadata are different, but the statement is considered True as long as data match.

- Parameters:

ts (Series object) – The target series for the comparison

index_tol (int, default 5) – tolerance on difference in datetime indices (in dtype units, which are seconds by default)

value_tol (float, default 1e-5) – tolerance on difference in values (in %)

- Returns:

same_data (bool) – Truth value of the proposition “the two series have the same data”.

same_metadata (bool) – Truth value of the proposition “the two series have the same metadata”.

Examples

import pyleoclim as pyleo soi = pyleo.utils.load_dataset('SOI') NINO3 = pyleo.utils.load_dataset('NINO3') soi.equals(NINO3)

The two series have different lengths, left: 828 vs right: 1596 Metadata are different: value_unit property -- left: mb, right: $^{\circ}$C value_name property -- left: SOI, right: NINO3 label property -- left: Southern Oscillation Index, right: NINO3 SST(False, False)

- fill_na(timespan=None, dt=1, keep_log=False)[source]

Fill NaNs into the timespan

- Parameters:

timespan (tuple or list) – The list of time points for slicing, whose length must be 2. For example, if timespan = [a, b], then the sliced output includes one segment [a, b]. If None, will use the start point and end point of the original timeseries

dt (float) – The time spacing to fill the NaNs; default is 1.

keep_log (Boolean) – if True, adds this step and its parameters to the series log.

- Returns:

new – The sliced Series object.

- Return type:

- filter(cutoff_freq=None, cutoff_scale=None, method='butterworth', keep_log=False, **kwargs)[source]

- Filtering methods for Series objects using four possible methods:

By default, this method implements a lowpass filter, though it can easily be turned into a bandpass or high-pass filter (see examples below).

- Parameters:

method (str, {'savitzky-golay', 'butterworth', 'firwin', 'lanczos'}) – the filtering method - ‘butterworth’: a Butterworth filter (default = 3rd order) - ‘savitzky-golay’: Savitzky-Golay filter - ‘firwin’: finite impulse response filter design using the window method, with default window as Hamming - ‘lanczos’: Lanczos zero-phase filter

cutoff_freq (float or list) – The cutoff frequency only works with the Butterworth method. If a float, it is interpreted as a low-frequency cutoff (lowpass). If a list, it is interpreted as a frequency band (f1, f2), with f1 < f2 (bandpass). Note that only the Butterworth option (default) currently supports bandpass filtering.

cutoff_scale (float or list) – cutoff_freq = 1 / cutoff_scale The cutoff scale only works with the Butterworth method and when cutoff_freq is None. If a float, it is interpreted as a low-frequency (high-scale) cutoff (lowpass). If a list, it is interpreted as a frequency band (f1, f2), with f1 < f2 (bandpass).

keep_log (Boolean) – if True, adds this step and its parameters to the series log.

kwargs (dict) – a dictionary of the keyword arguments for the filtering method, see pyleoclim.utils.filter.savitzky_golay, pyleoclim.utils.filter.butterworth, pyleoclim.utils.filter.lanczos and pyleoclim.utils.filter.firwin for the details

- Returns:

new

- Return type:

See also

pyleoclim.utils.filter.butterworthButterworth method

pyleoclim.utils.filter.savitzky_golaySavitzky-Golay method

pyleoclim.utils.filter.firwinFIR filter design using the window method

pyleoclim.utils.filter.lanczoslowpass filter via Lanczos resampling

Examples

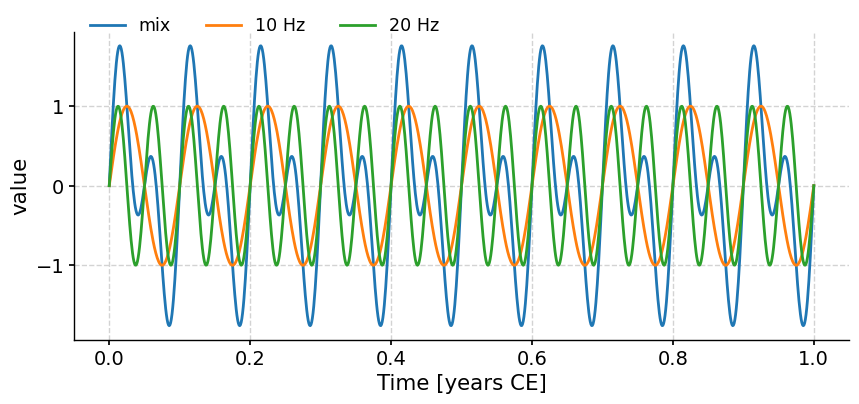

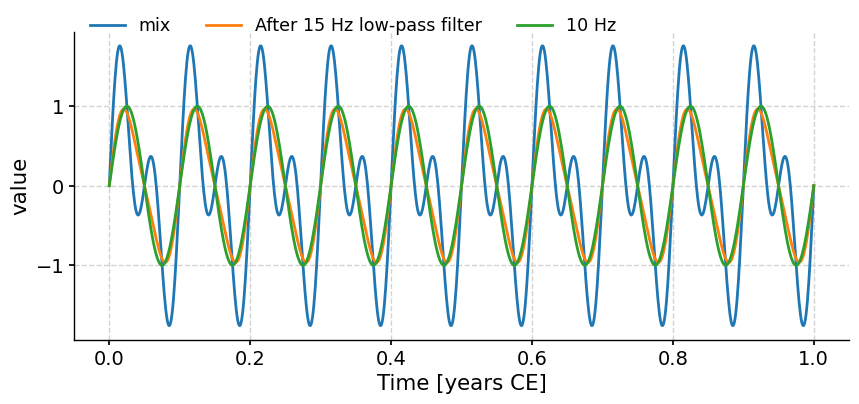

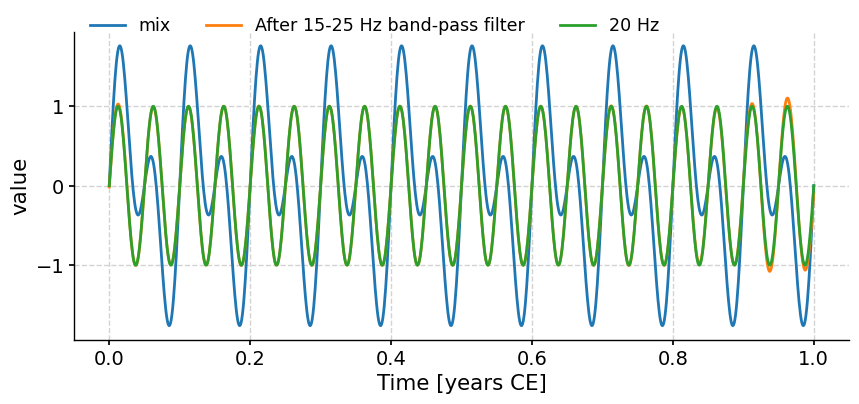

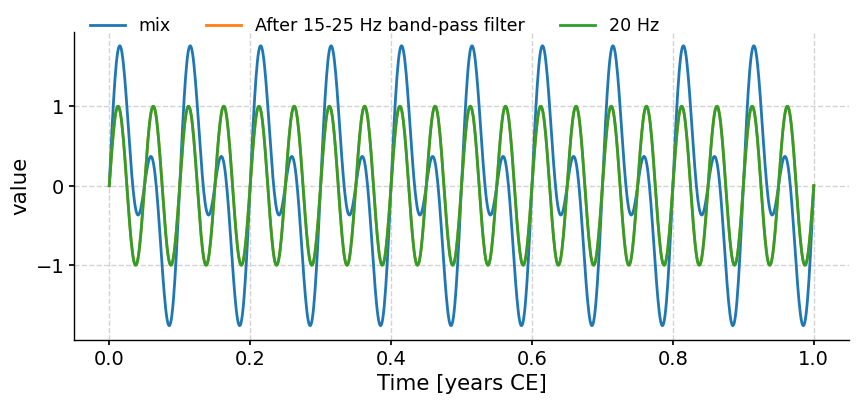

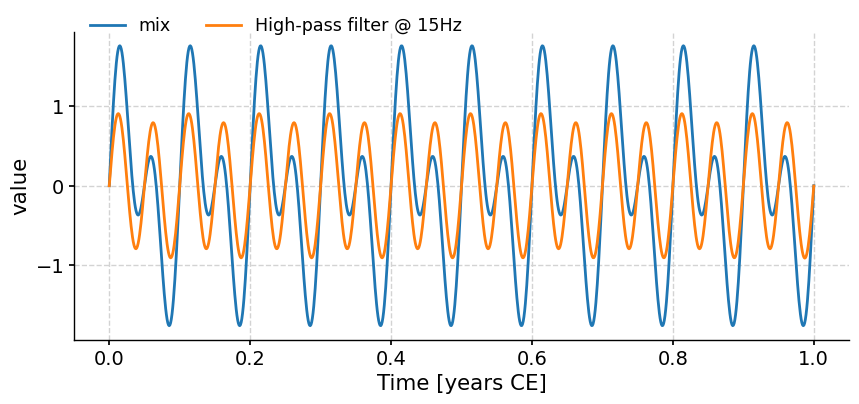

In the example below, we generate a signal as the sum of two signals with frequency 10 Hz and 20 Hz, respectively. Then we apply a low-pass filter with a cutoff frequency at 15 Hz, and compare the output to the signal of 10 Hz. After that, we apply a band-pass filter with the band 15-25 Hz, and compare the outcome to the signal of 20 Hz.

Generating the test data

import pyleoclim as pyleo import numpy as np t = np.linspace(0, 1, 1000) sig1 = np.sin(2*np.pi*10*t) sig2 = np.sin(2*np.pi*20*t) sig = sig1 + sig2 ts1 = pyleo.Series(time=t, value=sig1) ts2 = pyleo.Series(time=t, value=sig2) ts = pyleo.Series(time=t, value=sig) fig, ax = ts.plot(label='mix') ts1.plot(ax=ax, label='10 Hz') ts2.plot(ax=ax, label='20 Hz') ax.legend(loc='upper left', bbox_to_anchor=(0, 1.1), ncol=3)

Time axis values sorted in ascending order Time axis values sorted in ascending order Time axis values sorted in ascending order

<matplotlib.legend.Legend at 0x7f278cb4eb50>

Applying a low-pass filter

fig, ax = ts.plot(label='mix') ts.filter(cutoff_freq=15).plot(ax=ax, label='After 15 Hz low-pass filter') ts1.plot(ax=ax, label='10 Hz') ax.legend(loc='upper left', bbox_to_anchor=(0, 1.1), ncol=3)

<matplotlib.legend.Legend at 0x7f27880b7dd0>

Applying a band-pass filter

fig, ax = ts.plot(label='mix') ts.filter(cutoff_freq=[15, 25]).plot(ax=ax, label='After 15-25 Hz band-pass filter') ts2.plot(ax=ax, label='20 Hz') ax.legend(loc='upper left', bbox_to_anchor=(0, 1.1), ncol=3)

<matplotlib.legend.Legend at 0x7f278276cad0>

Above is using the default Butterworth filtering. To use FIR filtering with a window like Hanning is also simple:

fig, ax = ts.plot(label='mix') ts.filter(cutoff_freq=[15, 25], method='firwin', window='hanning').plot(ax=ax, label='After 15-25 Hz band-pass filter') ts2.plot(ax=ax, label='20 Hz') ax.legend(loc='upper left', bbox_to_anchor=(0, 1.1), ncol=3)

<matplotlib.legend.Legend at 0x7f2788151e50>

Applying a high-pass filter

fig, ax = ts.plot(label='mix') ts_low = ts.filter(cutoff_freq=15) ts_high = ts.copy() ts_high.value = ts.value - ts_low.value # subtract low-pass filtered series from original one ts_high.plot(label='High-pass filter @ 15Hz',ax=ax) ax.legend(loc='upper left', bbox_to_anchor=(0, 1.1), ncol=3)

<matplotlib.legend.Legend at 0x7f2782638c50>

- flip(axis='value', keep_log=False)[source]

Flips the Series along one or both axes

- Parameters:

axis (str, optional) – The axis along which the Series will be flipped. The default is ‘value’. Other acceptable options are ‘time’ or ‘both’. TODO: enable time flipping after paleopandas is released

keep_log (Boolean) – if True, adds this transformation to the series log.

- Returns:

new – The flipped series object

- Return type:

Examples

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('SOI') tsf = ts.flip(keep_log=True) fig, ax = tsf.plot() tsf.log

({0: 'flip', 'applied': True, 'axis': 'value'},)

- classmethod from_csv(path)[source]

Read in Series object from CSV file. Expects a metadata header dealineated by ‘###’ lines, as written by the Series.to_csv() method.

- Parameters:

filename (str) – name of the file, e.g. ‘myrecord.csv’

path (str) – directory of the file. Default: current directory, ‘.’

- Returns:

pyleoclim Series object containing data and metadata.

- Return type:

See also

pyleoclim.Series.to_csv

- classmethod from_json(path)[source]

Creates a pyleoclim.Series from a JSON file

The keys in the JSON file must correspond to the parameter associated with a Series object

- Parameters:

path (str) – Path to the JSON file

- Returns:

ts – A Pyleoclim Series object.

- Return type:

- gaussianize(keep_log=False)[source]

Gaussianizes the timeseries (i.e. maps its values to a standard normal)

- Returns:

new (Series) – The Gaussianized series object

keep_log (Boolean) – if True, adds this transformation to the series log.

References

Emile-Geay, J., and M. Tingley (2016), Inferring climate variability from nonlinear proxies: application to palaeo-enso studies, Climate of the Past, 12 (1), 31–50, doi:10.5194/cp- 12-31-2016.

- gkernel(step_style='max', keep_log=False, step_type=None, **kwargs)[source]

Coarse-grain a Series object via a Gaussian kernel.

Like .bin() this technique is conservative and uses the max space between points as the default spacing. Unlike .bin(), gkernel() uses a gaussian kernel to calculate the weighted average of the time series over these intervals.

Note that if the series being examined has very low resolution sections with few points, you may need to tune the parameter for the kernel e-folding scale (h).

- Parameters:

step_style (str) – type of timestep: ‘mean’, ‘median’, or ‘max’ of the time increments

keep_log (Boolean) – if True, adds the step type and its keyword arguments to the series log.

kwargs – Arguments for kernel function. See pyleoclim.utils.tsutils.gkernel for details

- Returns:

new – The coarse-grained Series object

- Return type:

See also

pyleoclim.utils.tsutils.gkernelapplication of a Gaussian kernel

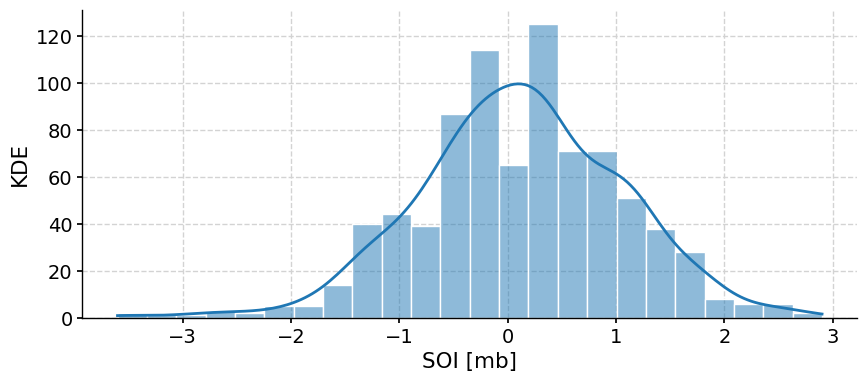

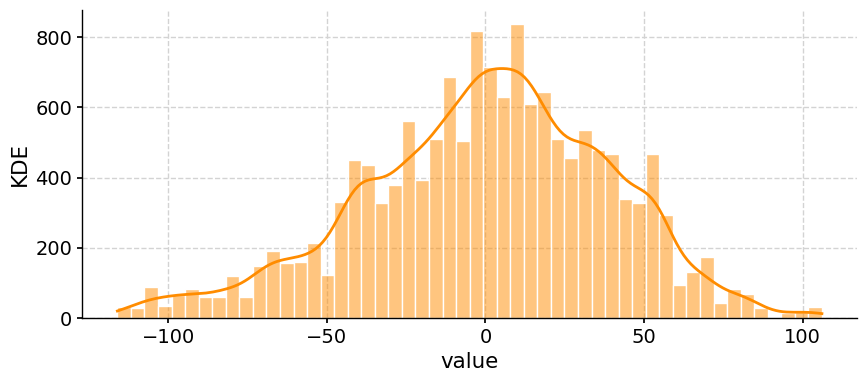

- histplot(figsize=[10, 4], title=None, savefig_settings=None, ax=None, ylabel='KDE', vertical=False, edgecolor='w', **plot_kwargs)[source]

Plot the distribution of the timeseries values

- Parameters:

figsize (list) – a list of two integers indicating the figure size

title (str) – the title for the figure

savefig_settings (dict) –

- the dictionary of arguments for plt.savefig(); some notes below:

”path” must be specified; it can be any existed or non-existed path, with or without a suffix; if the suffix is not given in “path”, it will follow “format”

”format” can be one of {“pdf”, “eps”, “png”, “ps”}

ax (matplotlib.axis, optional) – A matplotlib axis

ylabel (str) – Label for the count axis

vertical ({True,False}) – Whether to flip the plot vertically

edgecolor (matplotlib.color) – The color of the edges of the bar

plot_kwargs (dict) – Plotting arguments for seaborn histplot: https://seaborn.pydata.org/generated/seaborn.histplot.html

See also

pyleoclim.utils.plotting.savefigsaving figure in Pyleoclim

Examples

Distribution of the SOI record

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('SOI') fig, ax = ts.plot() fig, ax = ts.histplot()

- interp(method='linear', keep_log=False, **kwargs)[source]

Interpolate a Series object onto a new time axis

- Parameters:

method ({‘linear’, ‘nearest’, ‘zero’, ‘slinear’, ‘quadratic’, ‘cubic’, ‘previous’, ‘next’}) – where ‘zero’, ‘slinear’, ‘quadratic’ and ‘cubic’ refer to a spline interpolation of zeroth, first, second or third order; ‘previous’ and ‘next’ simply return the previous or next value of the point) or as an integer specifying the order of the spline interpolator to use. Default is ‘linear’.

keep_log (Boolean) – if True, adds the method name and its parameters to the series log.

kwargs – Arguments specific to each interpolation function. See pyleoclim.utils.tsutils.interp for details

- Returns:

new – An interpolated Series object

- Return type:

See also

pyleoclim.utils.tsutils.interpinterpolation function

- is_evenly_spaced(tol=0.001)[source]

Check if the Series time axis is evenly-spaced, within tolerance

- Parameters:

tol (float) – tolerance. If time increments are all within tolerance, the series is declared evenly-spaced. default = 1e-3

- Returns:

res

- Return type:

bool

- make_labels()[source]

Initialization of plot labels based on Series metadata

- Returns:

time_header (str) – Label for the time axis

value_header (str) – Label for the value axis

- outliers(method='kmeans', remove=True, settings=None, fig_outliers=True, figsize_outliers=[10, 4], plotoutliers_kwargs=None, savefigoutliers_settings=None, fig_clusters=True, figsize_clusters=[10, 4], plotclusters_kwargs=None, savefigclusters_settings=None, keep_log=False)[source]

Remove outliers from timeseries data. The method employs clustering to identify clusters in the data, using the k-means and DBSCAN algorithms from scikit-learn. Points falling a certain distance from the cluster (either away from the centroid for k-means or in a area of low density for DBSCAN) are considered outliers. The silhouette score is used to optimize parameter values.

A tutorial explaining how to use this method and set the parameters is available at https://github.com/LinkedEarth/PyleoTutorials/blob/main/notebooks/L2_outliers_detection.ipynb.

- Parameters:

method (str, {'kmeans','DBSCAN'}, optional) – The clustering method to use. The default is ‘kmeans’.

remove (bool, optional) – If True, removes the outliers. The default is True.

settings (dict, optional) – Specific arguments for the clustering functions. The default is None.

fig_outliers (bool, optional) – Whether to display the timeseries showing the outliers. The default is True.

figsize_outliers (list, optional) – The dimensions of the outliers figure. The default is [10,4].

plotoutliers_kwargs (dict, optional) – Arguments for the plot displaying the outliers. The default is None.

savefigoutliers_settings (dict, optional) –

Saving options for the outlier plot. The default is None. - “path” must be specified; it can be any existed or non-existed path,

with or without a suffix; if the suffix is not given in “path”, it will follow “format”

”format” can be one of {“pdf”, “eps”, “png”, “ps”}

fig_clusters (bool, optional) – Whether to display the clusters. The default is True.

figsize_clusters (list, optional) – The dimensions of the cluster figures. The default is [10,4].

plotclusters_kwargs (dict, optional) – Arguments for the cluster plot. The default is None.

savefigclusters_settings (dict, optional) –

Saving options for the cluster plot. The default is None. - “path” must be specified; it can be any existed or non-existed path,

with or without a suffix; if the suffix is not given in “path”, it will follow “format”

”format” can be one of {“pdf”, “eps”, “png”, “ps”}

keep_log (Boolean) – if True, adds the previous method parameters to the series log.

- Returns:

ts – A new Series object without outliers if remove is True. Otherwise, returns the original timeseries

- Return type:

See also

pyleoclim.utils.tsutils.detect_outliers_DBSCANOutlier detection using the DBSCAN method

pyleoclim.utils.tsutils.detect_outliers_kmeansOutlier detection using the kmeans method

pyleoclim.utils.tsutils.remove_outliersRemove outliers from the series

Examples

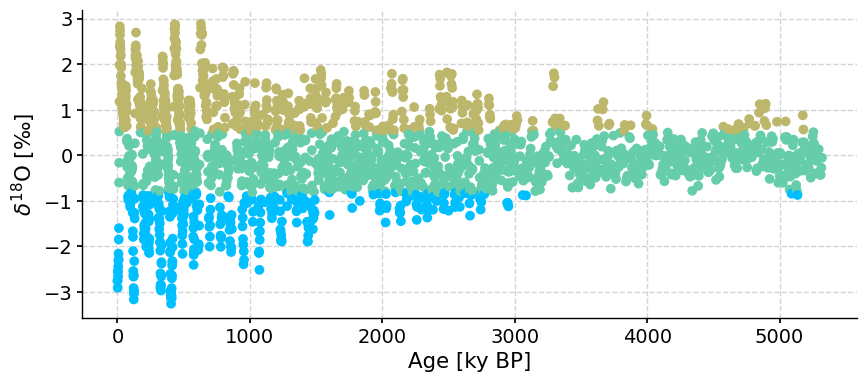

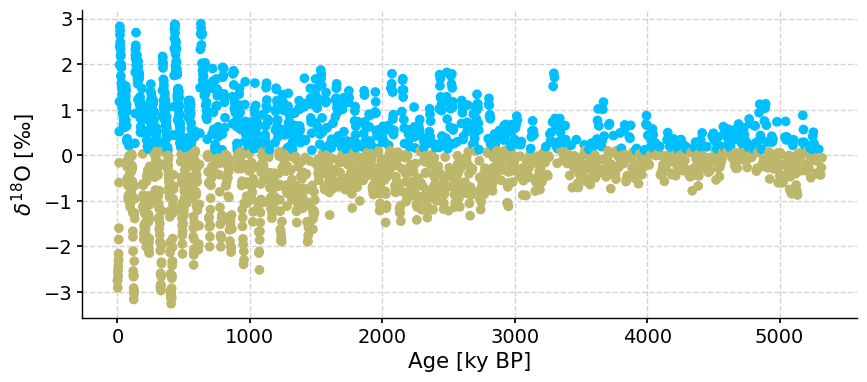

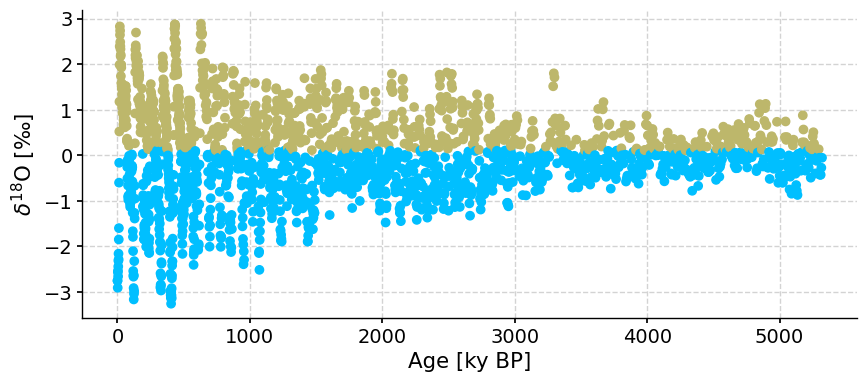

import pyleoclim as pyleo LR04 = pyleo.utils.load_dataset('LR04') LR_out = LR04.detrend().standardize().outliers(method='kmeans')

To set the number of clusters:

LR_out = LR04.detrend().standardize().outliers(method='kmeans', settings={'nbr_clusters':2})

The log contains diagnostic information, to access it, set the keep_log parameter to True:

LR_out = LR04.detrend().standardize().outliers(method='kmeans', settings={'nbr_clusters':2}, keep_log=True)

- plot(figsize=[10, 4], marker=None, markersize=None, color=None, linestyle=None, linewidth=None, xlim=None, ylim=None, label=None, xlabel=None, ylabel=None, title=None, zorder=None, legend=True, plot_kwargs=None, lgd_kwargs=None, alpha=None, savefig_settings=None, ax=None, invert_xaxis=False, invert_yaxis=False)[source]

Plot the timeseries

- Parameters:

figsize (list) – a list of two integers indicating the figure size

marker (str) – e.g., ‘o’ for dots See [matplotlib.markers](https://matplotlib.org/stable/api/markers_api.html) for details

markersize (float) – the size of the marker

color (str, list) – the color for the line plot e.g., ‘r’ for red See [matplotlib colors](https://matplotlib.org/stable/gallery/color/color_demo.html) for details

linestyle (str) – e.g., ‘–’ for dashed line See [matplotlib.linestyles](https://matplotlib.org/stable/gallery/lines_bars_and_markers/linestyles.html) for details

linewidth (float) – the width of the line

label (str) – the label for the line

xlabel (str) – the label for the x-axis

ylabel (str) – the label for the y-axis

title (str) – the title for the figure

zorder (int) – The default drawing order for all lines on the plot

legend ({True, False}) – plot legend or not

invert_xaxis (bool, optional) – if True, the x-axis of the plot will be inverted

invert_yaxis (bool, optional) – same for the y-axis

plot_kwargs (dict) – the dictionary of keyword arguments for ax.plot() See [matplotlib.pyplot.plot](https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.plot.html) for details

lgd_kwargs (dict) – the dictionary of keyword arguments for ax.legend() See [matplotlib.pyplot.legend](https://matplotlib.org/stable/api/_as_gen/matplotlib.pyplot.legend.html) for details

alpha (float) – Transparency setting

savefig_settings (dict) –

the dictionary of arguments for plt.savefig(); some notes below: - “path” must be specified; it can be any existed or non-existed path,

with or without a suffix; if the suffix is not given in “path”, it will follow “format”

”format” can be one of {“pdf”, “eps”, “png”, “ps”}

ax (matplotlib.axis, optional) – the axis object from matplotlib See [matplotlib.axes](https://matplotlib.org/api/axes_api.html) for details.

- Returns:

fig (matplotlib.figure) – the figure object from matplotlib See [matplotlib.pyplot.figure](https://matplotlib.org/stable/api/figure_api.html) for details.

ax (matplotlib.axis) – the axis object from matplotlib See [matplotlib.axes](https://matplotlib.org/stable/api/axes_api.html) for details.

Notes

When ax is passed, the return will be ax only; otherwise, both fig and ax will be returned.

See also

pyleoclim.utils.plotting.savefigsaving a figure in Pyleoclim

Examples

Plot the SOI record

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('SOI') fig, ax = ts.plot()

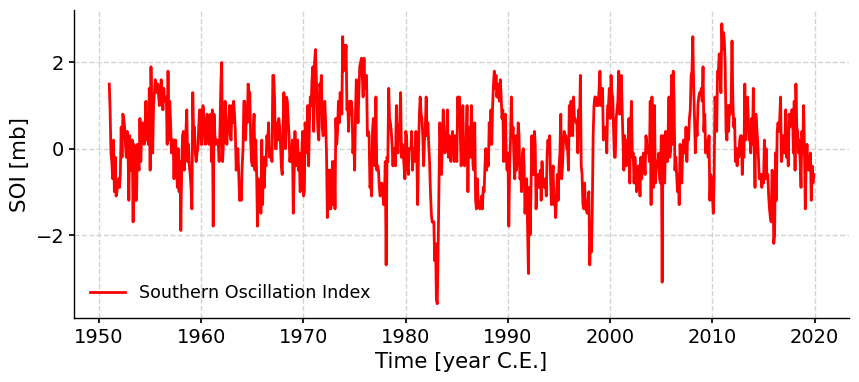

Change the line color

fig, ax = ts.plot(color='r')

- Save the figure. Two options available, only one is needed:

Within the plotting command

After the figure has been generated

fig, ax = ts.plot(color='k', savefig_settings={'path': 'ts_plot3.png'}); pyleo.closefig(fig) pyleo.savefig(fig,path='ts_plot3.png')

Figure saved at: "ts_plot3.png"

Figure saved at: "ts_plot3.png"

- resample(rule, keep_log=False, **kwargs)[source]

Run analogue to pandas.Series.resample.

This is a convenience method: doing

ser.resample(‘AS’).mean()

will do the same thing as

ser.pandas_method(lambda x: x.resample(‘AS’).mean())

but will also accept some extra resampling rules, such as ‘Ga’ (see below).

- Parameters:

rule (str) –

The offset string or object representing target conversion. Can also accept pyleoclim units, such as ‘ka’ (1000 years), ‘Ma’ (1 million years), and ‘Ga’ (1 billion years).

Check the [pandas resample docs](https://pandas.pydata.org/docs/dev/reference/api/pandas.DataFrame.resample.html) for more details.

kwargs (dict) – Any other arguments which will be passed to pandas.Series.resample.

- Returns:

Resampler object, not meant to be used to directly. Instead, an aggregation should be called on it, see examples below.

- Return type:

SeriesResampler

Examples

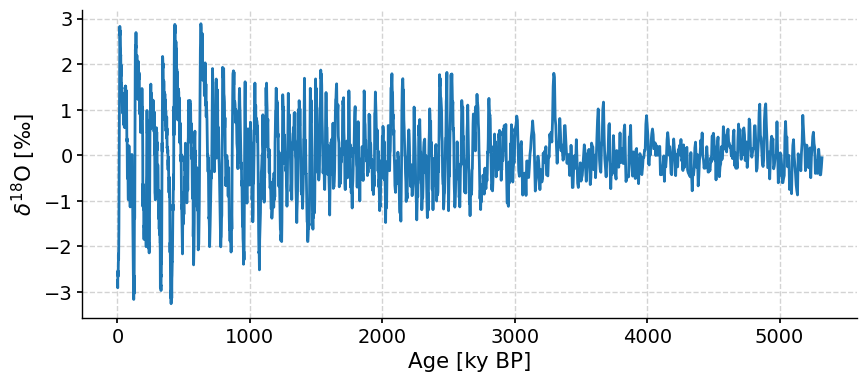

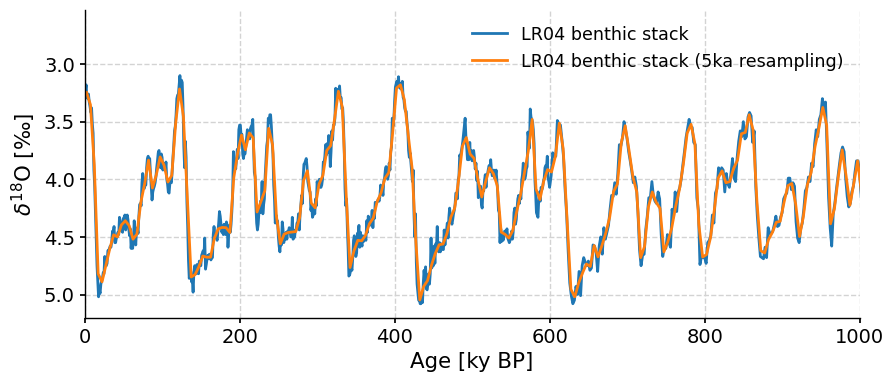

ts = pyleo.utils.load_dataset('LR04') ts5k = ts.resample('5ka').mean() fig, ax = ts.plot(invert_yaxis='True',xlim=[0, 1000]) ts5k.plot(ax=ax,color='C1')

<Axes: xlabel='Age [ky BP]', ylabel='$\\delta^{18} \\mathrm{O}$ [‰]'>

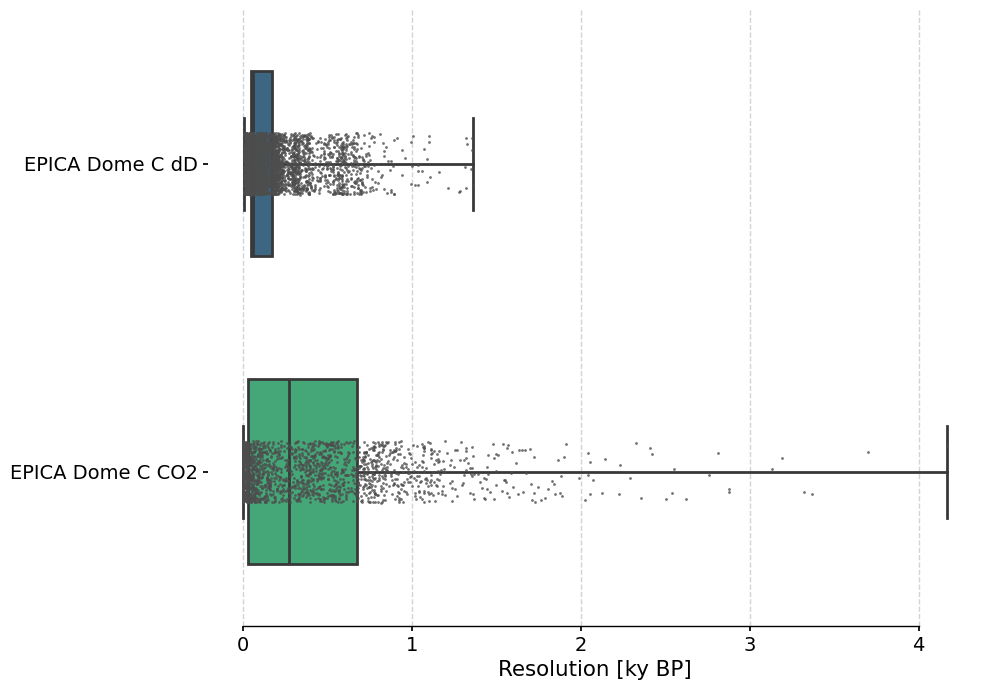

- resolution()[source]

Generate a resolution object

Increments are assigned to the preceding time value. E.g. for time_axis = [0,1,3], resolution.resolution = [1,2] resolution.time = [0,1]

- Returns:

resolution – Resolution object

- Return type:

Resolution

See also

pyleoclim.core.resolutions.ResolutionExamples

To create a resolution object, apply the .resolution() method to a Series object

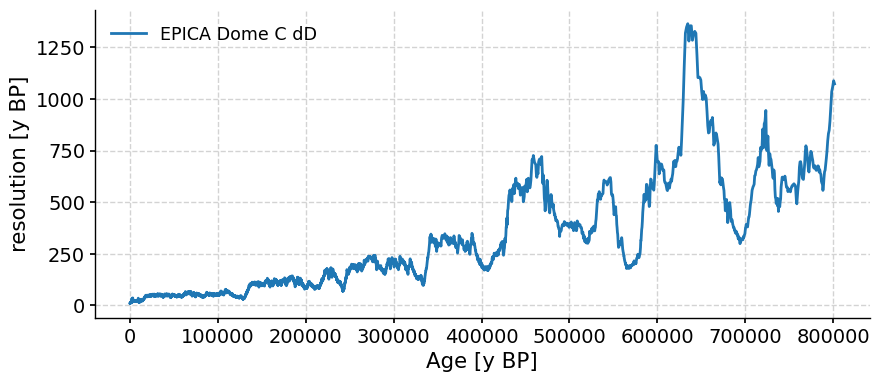

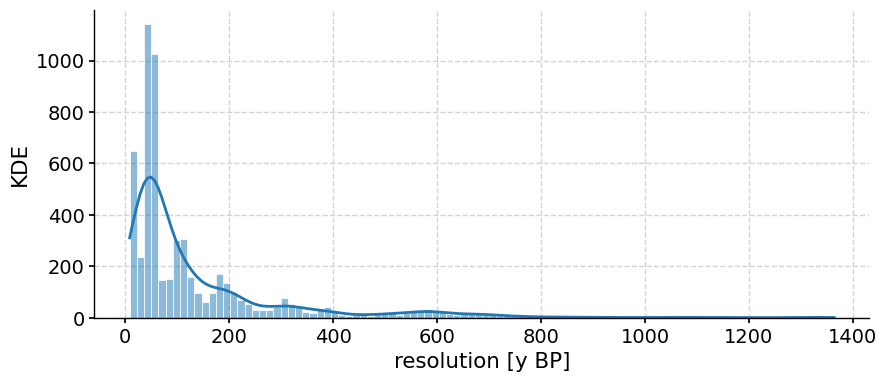

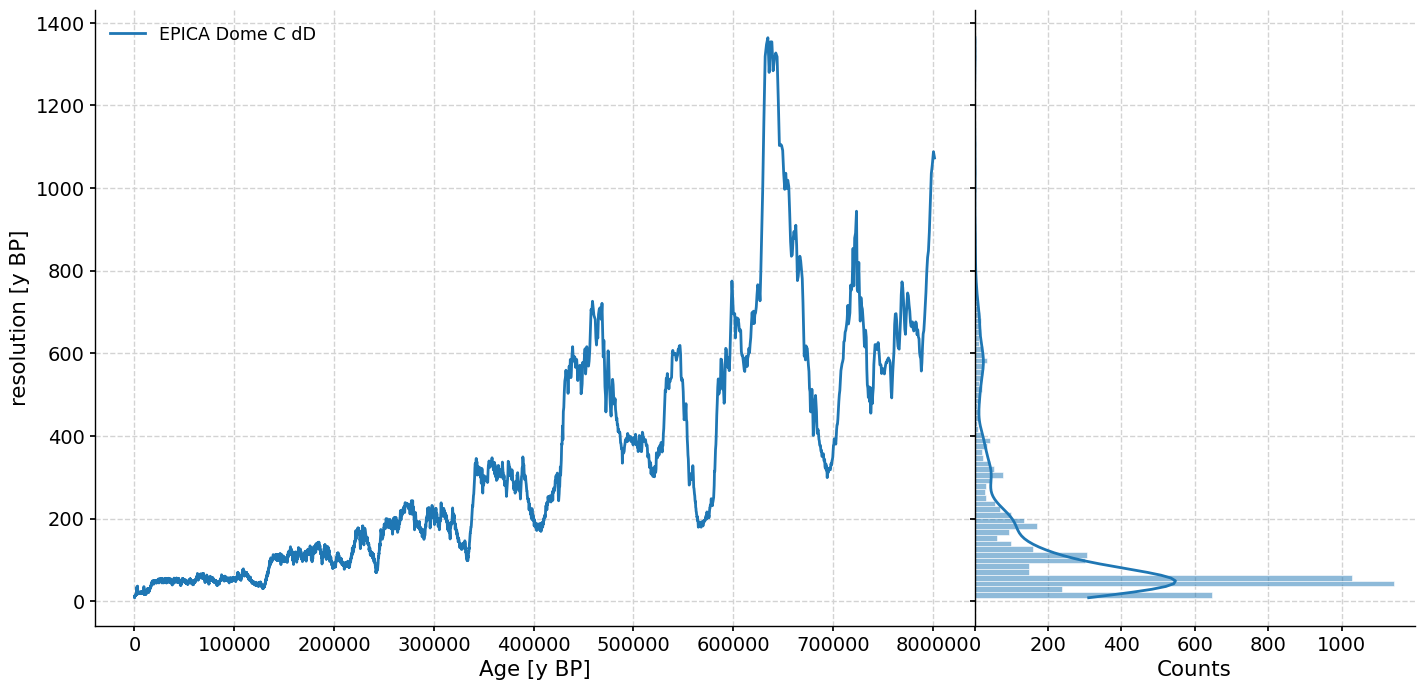

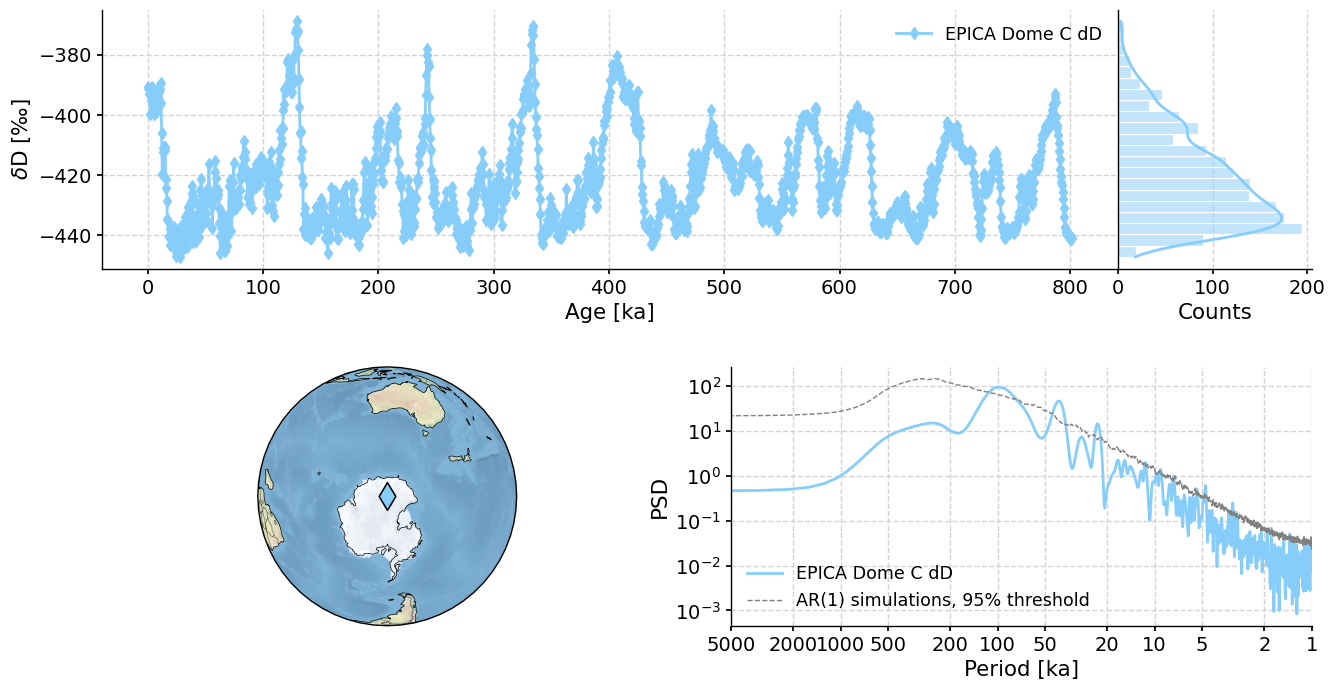

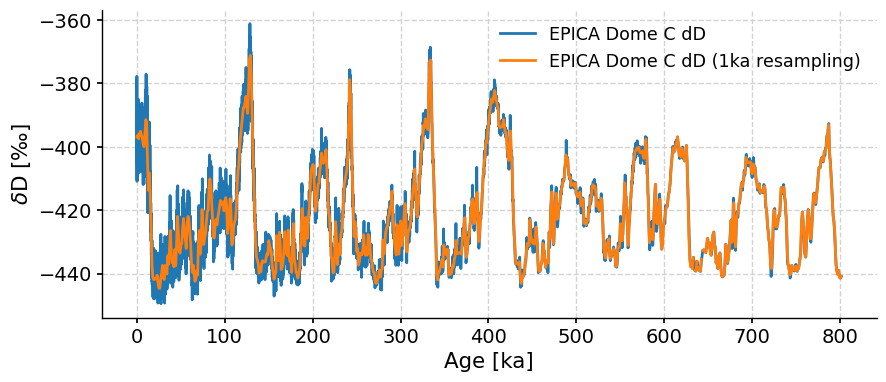

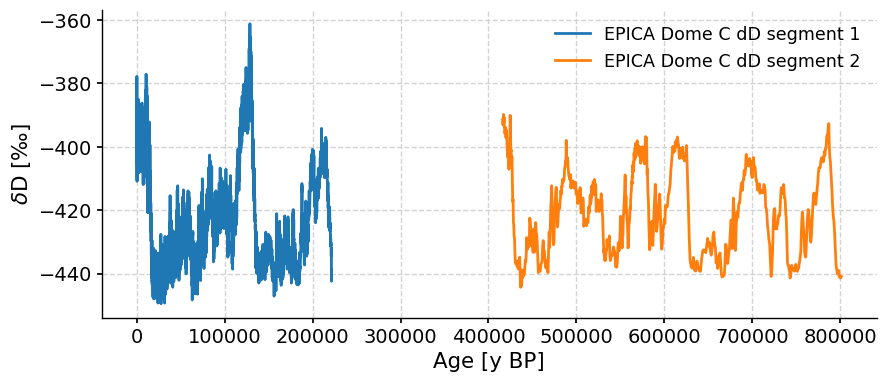

ts = pyleo.utils.load_dataset('EDC-dD') resolution = ts.resolution()

Several methods are then available:

Summary statistics can be obtained via .describe()

resolution.describe()

{'nobs': 5784, 'minmax': (8.244210000000002, 1364.0), 'mean': 138.5932963710235, 'variance': 29806.73648249974, 'skewness': 2.661861461835658, 'kurtosis': 8.705801510819656, 'median': 58.132250000006024}A simple plot can be created using .plot()

resolution.plot()

(<Figure size 1000x400 with 1 Axes>, <Axes: xlabel='Age [y BP]', ylabel='resolution [y BP]'>)

The distribution of resolution

resolution.histplot()

(<Figure size 1000x400 with 1 Axes>, <Axes: xlabel='resolution [y BP]', ylabel='KDE'>)

Or a dashboard combining plot() and histplot() side by side:

resolution.dashboard()

(<Figure size 1100x800 with 2 Axes>, {'res': <Axes: xlabel='Age [y BP]', ylabel='resolution [y BP]'>, 'res_hist': <Axes: xlabel='Counts'>})

- segment(factor=10, verbose=False)[source]

Gap detection

- This function segments a timeseries into n number of parts following a gap

detection algorithm. The rule of gap detection is very simple: we define the intervals between time points as dts, then if dts[i] is larger than factor * dts[i-1], we think that the change of dts (or the gradient) is too large, and we regard it as a breaking point and divide the time series into two segments here

- Parameters:

factor (float) – The factor that adjusts the threshold for gap detection

verbose (bool) – If True, will print warning messages if there is any

- Returns:

res – If gaps were detected, returns the segments in a MultipleSeries object, else, returns the original timeseries.

- Return type:

- sel(value=None, time=None, tolerance=0)[source]

Slice Series based on ‘value’ or ‘time’.

- Parameters:

value (int, float, slice) – If int/float, then the Series will be sliced so that self.value is equal to value (+/- tolerance). If slice, then the Series will be sliced so self.value is between slice.start and slice.stop (+/- tolerance).

time (int, float, slice) – If int/float, then the Series will be sliced so that self.time is equal to time. (+/- tolerance) If slice of int/float, then the Series will be sliced so that self.time is between slice.start and slice.stop. If slice of datetime (or str containing datetime, such as ‘2020-01-01’), then the Series will be sliced so that self.datetime_index is between time.start and time.stop (+/- tolerance, which needs to be a timedelta).

tolerance (int, float, default 0.) – Used by value and time, see above.

- Return type:

Copy of self, sliced according to value and time.

Examples

>>> ts = pyleo.Series( ... time=np.array([1, 1.1, 2, 3]), value=np.array([4, .9, 6, 1]), time_unit='years BP' ... ) >>> ts.sel(value=1) {'log': ({0: 'clean_ts', 'applied': True, 'verbose': False}, {2: 'clean_ts', 'applied': True, 'verbose': False})}

None time [years BP] 3.0 1.0 Name: value, dtype: float64

If you also want to include the value 3.9, you could set tolerance to .1:

>>> ts.sel(value=1, tolerance=.1) {'log': ({0: 'clean_ts', 'applied': True, 'verbose': False}, {2: 'clean_ts', 'applied': True, 'verbose': False})}

None time [years BP] 1.1 0.9 3.0 1.0 Name: value, dtype: float64

You can also pass a slice to select a range of values:

>>> ts.sel(value=slice(4, 6)) {'log': ({0: 'clean_ts', 'applied': True, 'verbose': False}, {2: 'clean_ts', 'applied': True, 'verbose': False})}

None time [years BP] 1.0 4.0 2.0 6.0 Name: value, dtype: float64

>>> ts.sel(value=slice(4, None)) {'log': ({0: 'clean_ts', 'applied': True, 'verbose': False}, {2: 'clean_ts', 'applied': True, 'verbose': False})}

None time [years BP] 1.0 4.0 2.0 6.0 Name: value, dtype: float64

>>> ts.sel(value=slice(None, 4)) {'log': ({0: 'clean_ts', 'applied': True, 'verbose': False}, {2: 'clean_ts', 'applied': True, 'verbose': False})}

None time [years BP] 1.0 4.0 1.1 0.9 3.0 1.0 Name: value, dtype: float64

Similarly, you filter using time instead of value.

- slice(timespan)[source]

Slicing the timeseries with a timespan (tuple or list)

- Parameters:

timespan (tuple or list) – The list of time points for slicing, whose length must be even. When there are n time points, the output Series includes n/2 segments. For example, if timespan = [a, b], then the sliced output includes one segment [a, b]; if timespan = [a, b, c, d], then the sliced output includes segment [a, b] and segment [c, d].

- Returns:

new – The sliced Series object.

- Return type:

Examples

slice the SOI from 1972 to 1998

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('SOI') ts_slice = ts.slice([1972, 1998]) print("New time bounds:",ts_slice.time.min(),ts_slice.time.max())

New time bounds: 1972.0 1998.0

- sort(verbose=False, ascending=True, keep_log=False)[source]

- Ensure timeseries is set to a monotonically increasing axis.

If the time axis is prograde to begin with, no transformation is applied.

- Parameters:

verbose (bool) – If True, will print warning messages if there is any

keep_log (Boolean) – if True, adds this step and its parameter to the series log.

- Returns:

new – Series object with removed NaNs and sorting

- Return type:

- spectral(method='lomb_scargle', freq_method='log', freq_kwargs=None, settings=None, label=None, scalogram=None, verbose=False)[source]

Perform spectral analysis on the timeseries

- Parameters:

method (str;) – {‘wwz’, ‘mtm’, ‘lomb_scargle’, ‘welch’, ‘periodogram’, ‘cwt’}

freq_method (str) – {‘log’,’scale’, ‘nfft’, ‘lomb_scargle’, ‘welch’}

freq_kwargs (dict) – Arguments for frequency vector

settings (dict) – Arguments for the specific spectral method

label (str) – Label for the PSD object

scalogram (pyleoclim.core.series.Series.Scalogram) – The return of the wavelet analysis; effective only when the method is ‘wwz’ or ‘cwt’

verbose (bool) – If True, will print warning messages if there is any

- Returns:

psd – A PSD object

- Return type:

See also

pyleoclim.utils.spectral.mtmSpectral analysis using the Multitaper approach

pyleoclim.utils.spectral.lomb_scargleSpectral analysis using the Lomb-Scargle method

pyleoclim.utils.spectral.welchSpectral analysis using the Welch segement approach

pyleoclim.utils.spectral.periodogramSpectral anaysis using the basic Fourier transform

pyleoclim.utils.spectral.wwz_psdSpectral analysis using the Wavelet Weighted Z transform

pyleoclim.utils.spectral.cwt_psdSpectral analysis using the continuous Wavelet Transform as implemented by Torrence and Compo

pyleoclim.utils.spectral.make_freq_vectorFunctions to create the frequency vector

pyleoclim.utils.tsutils.detrendDetrending function

pyleoclim.core.psds.PSDPSD object

pyleoclim.core.psds.MultiplePSDMultiple PSD object

Examples

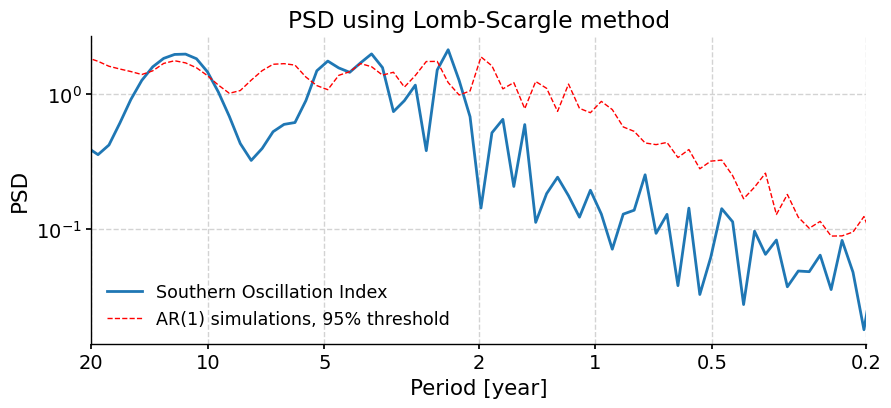

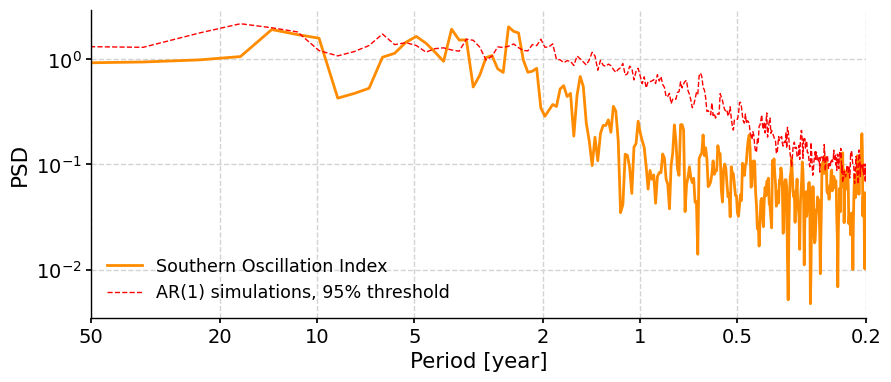

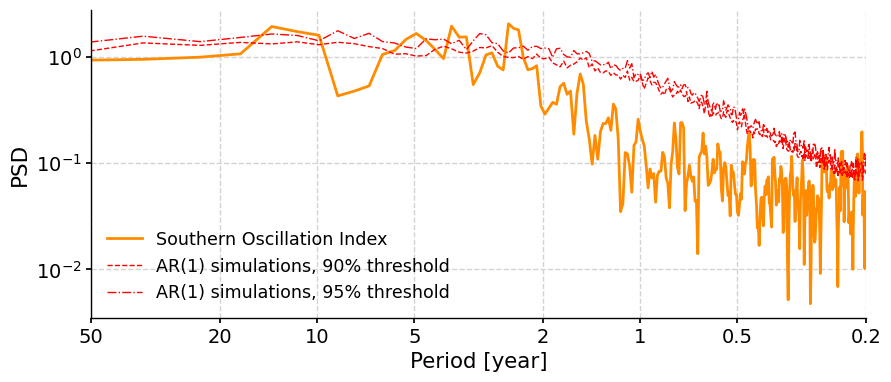

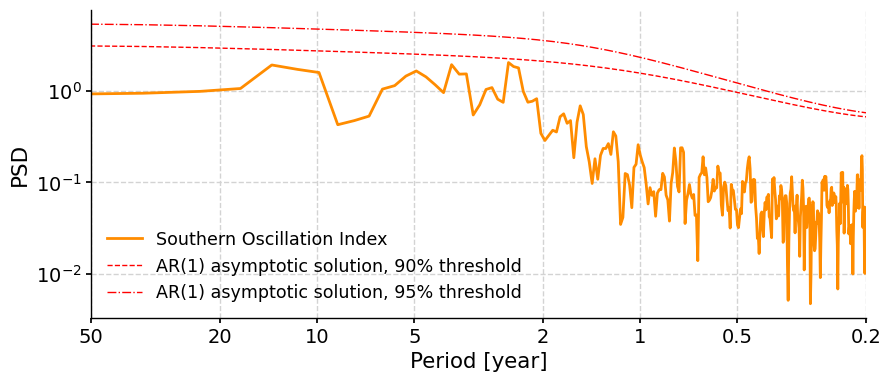

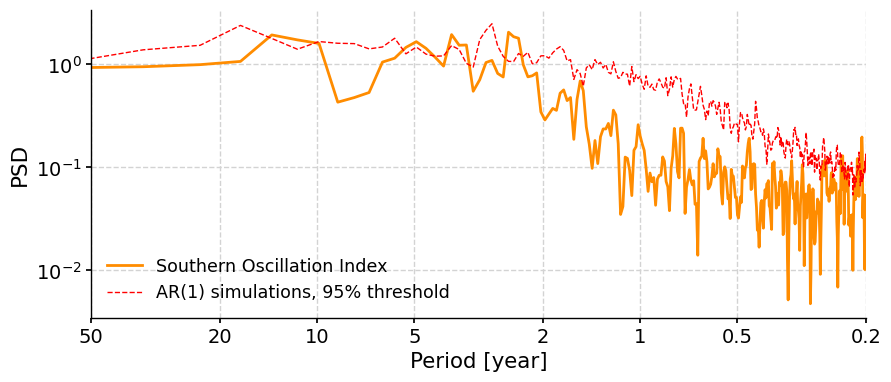

Calculate the spectrum of SOI using the various methods and compute significance

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('SOI') ts_std = ts.standardize()

Lomb-Scargle

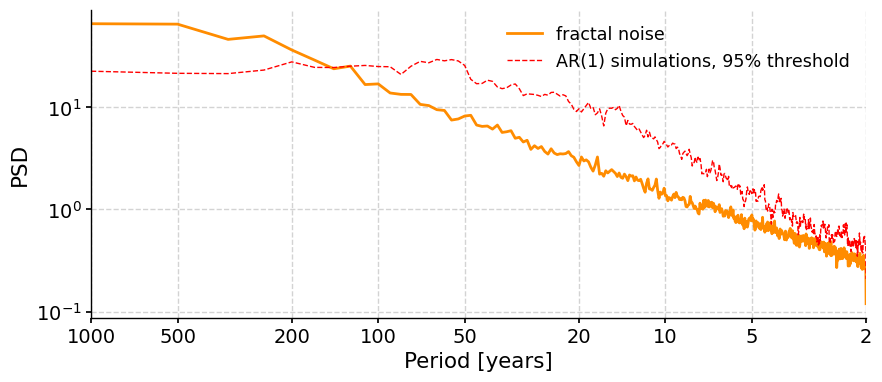

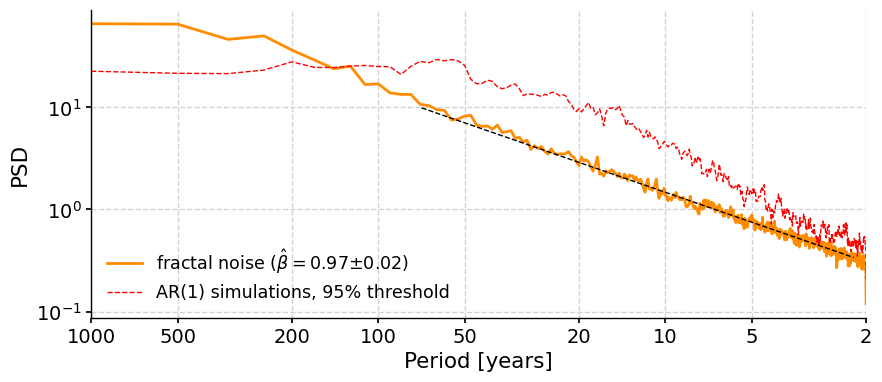

psd_ls = ts_std.spectral(method='lomb_scargle') psd_ls_signif = psd_ls.signif_test(number=20) #in practice, need more AR1 simulations fig, ax = psd_ls_signif.plot(title='PSD using Lomb-Scargle method')

We may pass in method-specific arguments via “settings”, which is a dictionary. For instance, to adjust the number of overlapping segment for Lomb-Scargle, we may specify the method-specific argument “n50”; to adjust the frequency vector, we may modify the “freq_method” or modify the method-specific argument “freq”.

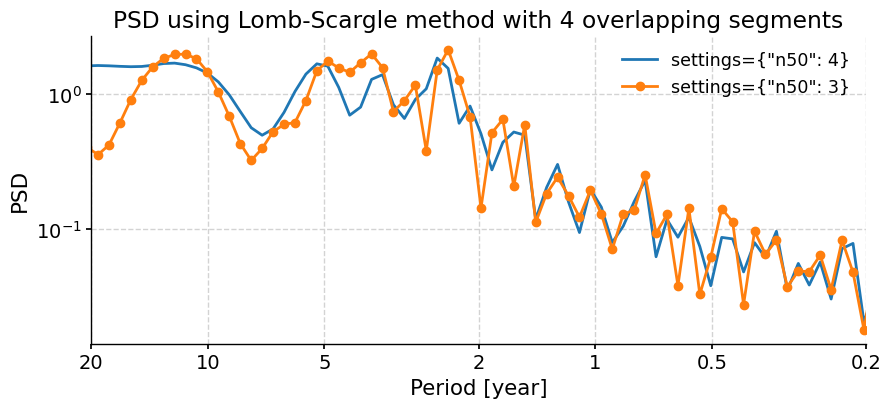

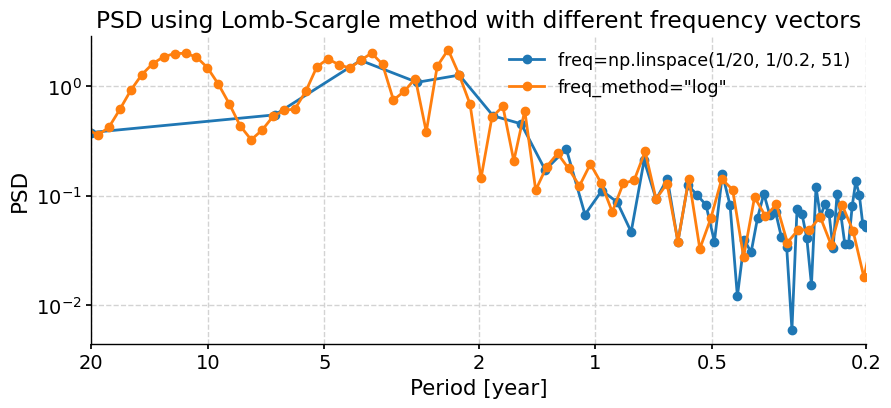

import numpy as np psd_LS_n50 = ts_std.spectral(method='lomb_scargle', settings={'n50': 4}) # c=1e-2 yields lower frequency resolution psd_LS_freq = ts_std.spectral(method='lomb_scargle', settings={'freq': np.linspace(1/20, 1/0.2, 51)}) psd_LS_LS = ts_std.spectral(method='lomb_scargle', freq_method='lomb_scargle') # with frequency vector generated using REDFIT method fig, ax = psd_LS_n50.plot( title='PSD using Lomb-Scargle method with 4 overlapping segments', label='settings={"n50": 4}') psd_ls.plot(ax=ax, label='settings={"n50": 3}', marker='o') fig, ax = psd_LS_freq.plot( title='PSD using Lomb-Scargle method with different frequency vectors', label='freq=np.linspace(1/20, 1/0.2, 51)', marker='o') psd_ls.plot(ax=ax, label='freq_method="log"', marker='o')

<Axes: title={'center': 'PSD using Lomb-Scargle method with different frequency vectors'}, xlabel='Period [year]', ylabel='PSD'>

You may notice the differences in the PSD curves regarding smoothness and the locations of the analyzed period points.

For other method-specific arguments, please look up the specific methods in the “See also” section.

WWZ

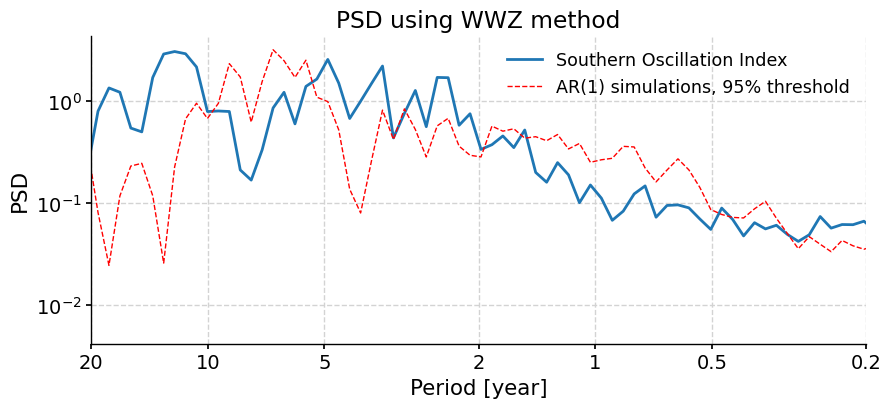

psd_wwz = ts_std.spectral(method='wwz') # wwz is the default method psd_wwz_signif = psd_wwz.signif_test(number=1) # significance test; for real work, should use number=200 or even larger fig, ax = psd_wwz_signif.plot(title='PSD using WWZ method')

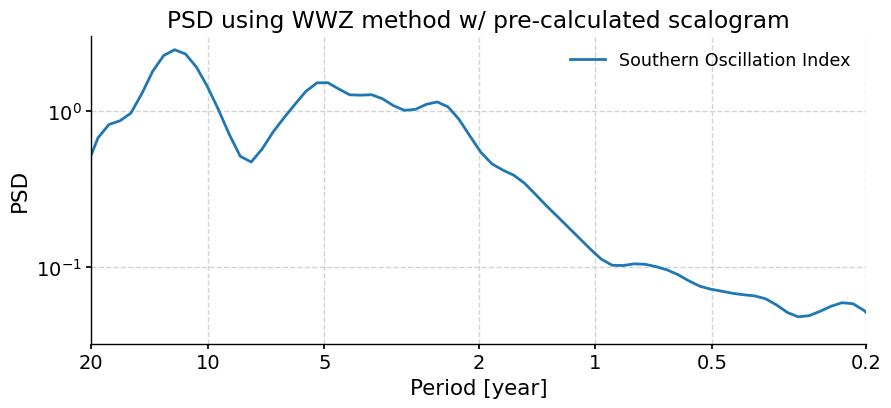

We may take advantage of a pre-calculated scalogram using WWZ to accelerate the spectral analysis (although note that the default parameters for spectral and wavelet analysis using WWZ are different):

scal_wwz = ts_std.wavelet(method='wwz') # wwz is the default method psd_wwz_fast = ts_std.spectral(method='wwz', scalogram=scal_wwz) fig, ax = psd_wwz_fast.plot(title='PSD using WWZ method w/ pre-calculated scalogram')

Periodogram

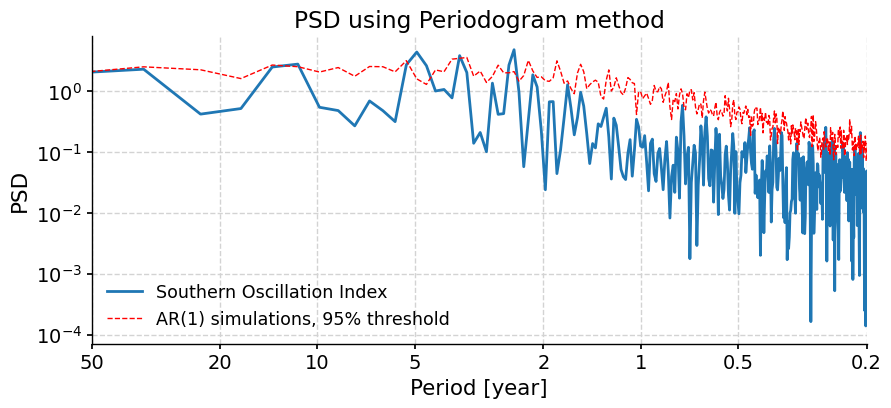

ts_interp = ts_std.interp() psd_perio = ts_interp.spectral(method='periodogram') psd_perio_signif = psd_perio.signif_test(number=20, method='ar1sim') #in practice, need more AR1 simulations fig, ax = psd_perio_signif.plot(title='PSD using Periodogram method')

Welch

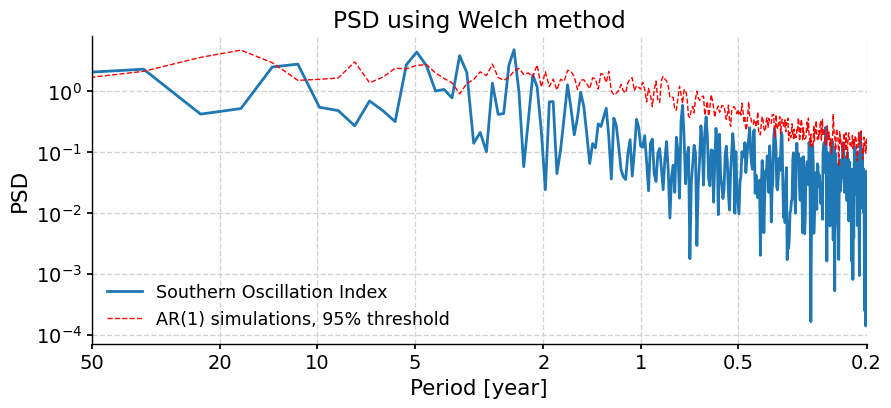

psd_welch = ts_interp.spectral(method='welch') psd_welch_signif = psd_welch.signif_test(number=20, method='ar1sim') #in practice, need more AR1 simulations fig, ax = psd_welch_signif.plot(title='PSD using Welch method')

MTM

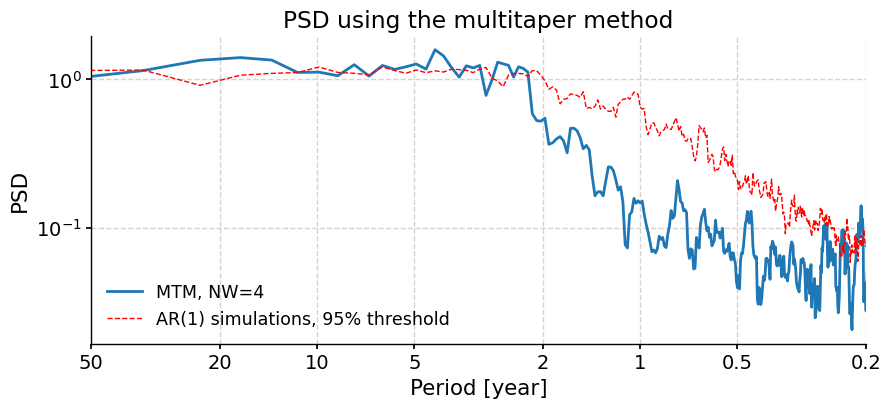

psd_mtm = ts_interp.spectral(method='mtm', label='MTM, NW=4') psd_mtm_signif = psd_mtm.signif_test(number=20, method='ar1sim') #in practice, need more AR1 simulations fig, ax = psd_mtm_signif.plot(title='PSD using the multitaper method')

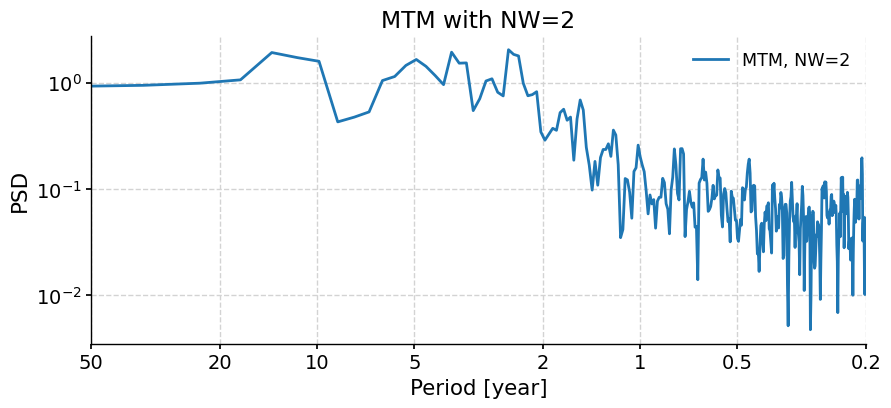

By default, MTM uses a half-bandwidth of 4 times the fundamental (Rayleigh) frequency, i.e. NW = 4, which is the most conservative choice. NW runs from 2 to 4 in multiples of 1/2, and can be adjusted like so (note the sharper peaks and higher overall variance, which may not be desirable):

psd_mtm2 = ts_interp.spectral(method='mtm', settings={'NW':2}, label='MTM, NW=2') fig, ax = psd_mtm2.plot(title='MTM with NW=2')

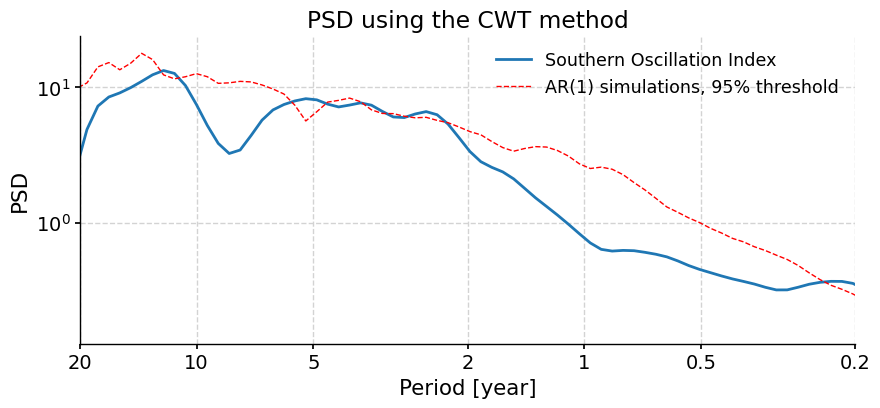

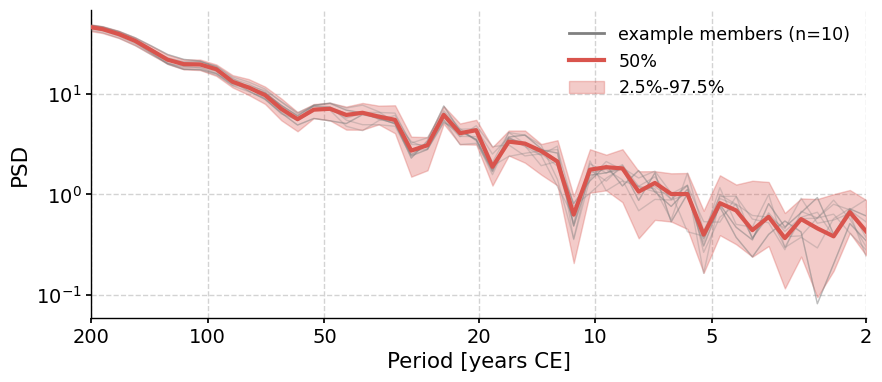

Continuous Wavelet Transform

ts_interp = ts_std.interp() psd_cwt = ts_interp.spectral(method='cwt') psd_cwt_signif = psd_cwt.signif_test(number=20) fig, ax = psd_cwt_signif.plot(title='PSD using the CWT method')

- ssa(M=None, nMC=0, f=0.3, trunc=None, var_thresh=80, online=True)[source]

Singular Spectrum Analysis

Nonparametric, orthogonal decomposition of timeseries into constituent oscillations. This implementation uses the method of [1], with applications presented in [2]. Optionally (MC>0), the significance of eigenvalues is assessed by Monte-Carlo simulations of an AR(1) model fit to X, using [3]. The method expects regular spacing, but is tolerant to missing values, up to a fraction 0<f<1 (see [4]).

- Parameters:

M (int, optional) – window size. The default is None (10% of the length of the series).

MC (int, optional) – Number of iteration in the Monte-Carlo process. The default is 0.

f (float, optional) – maximum allowable fraction of missing values. The default is 0.3.

trunc (str) –

- if present, truncates the expansion to a level K < M owing to one of 4 criteria:

’kaiser’: variant of the Kaiser-Guttman rule, retaining eigenvalues larger than the median

’mcssa’: Monte-Carlo SSA (use modes above the 95% quantile from an AR(1) process)

’var’: first K modes that explain at least var_thresh % of the variance.

- Default is None, which bypasses truncation (K = M)

’knee’: Wherever the “knee” of the screeplot occurs.

Recommended as a first pass at identifying significant modes as it tends to be more robust than ‘kaiser’ or ‘var’, and faster than ‘mcssa’. While no truncation method is imposed by default, if the goal is to enhance the S/N ratio and reconstruct a smooth version of the attractor’s skeleton, then the knee-finding method is a good compromise between objectivity and efficiency. See kneed’s documentation for more details on the knee finding algorithm.

var_thresh (float) – variance threshold for reconstruction (only impactful if trunc is set to ‘var’)

online (bool; {True,False}) –

Whether or not to conduct knee finding analysis online or offline. Only called when trunc = ‘knee’. Default is True See kneed’s documentation for details.

- Returns:

res (object of the SsaRes class containing:)

eigvals ((M, ) array of eigenvalues)

eigvecs ((M, M) Matrix of temporal eigenvectors (T-EOFs))

PC ((N - M + 1, M) array of principal components (T-PCs))

RCmat ((N, M) array of reconstructed components)

RCseries ((N,) reconstructed series, with mean and variance restored (same type as original))

pctvar ((M, ) array of the fraction of variance (%) associated with each mode)

eigvals_q ((M, 2) array contaitning the 5% and 95% quantiles of the Monte-Carlo eigenvalue spectrum [ if nMC >0 ])

References

[1]_ Vautard, R., and M. Ghil (1989), Singular spectrum analysis in nonlinear dynamics, with applications to paleoclimatic time series, Physica D, 35, 395–424.

[2]_ Ghil, M., R. M. Allen, M. D. Dettinger, K. Ide, D. Kondrashov, M. E. Mann, A. Robertson, A. Saunders, Y. Tian, F. Varadi, and P. Yiou (2002), Advanced spectral methods for climatic time series, Rev. Geophys., 40(1), 1003–1052, doi:10.1029/2000RG000092.

[3]_ Allen, M. R., and L. A. Smith (1996), Monte Carlo SSA: Detecting irregular oscillations in the presence of coloured noise, J. Clim., 9, 3373–3404.

[4]_ Schoellhamer, D. H. (2001), Singular spectrum analysis for time series with missing data, Geophysical Research Letters, 28(16), 3187–3190, doi:10.1029/2000GL012698.

See also

pyleoclim.core.utils.decomposition.ssaSingular Spectrum Analysis utility

pyleoclim.core.ssares.SsaRes.modeplotplot SSA modes

pyleoclim.core.ssares.SsaRes.screeplotplot SSA eigenvalue spectrum

Examples

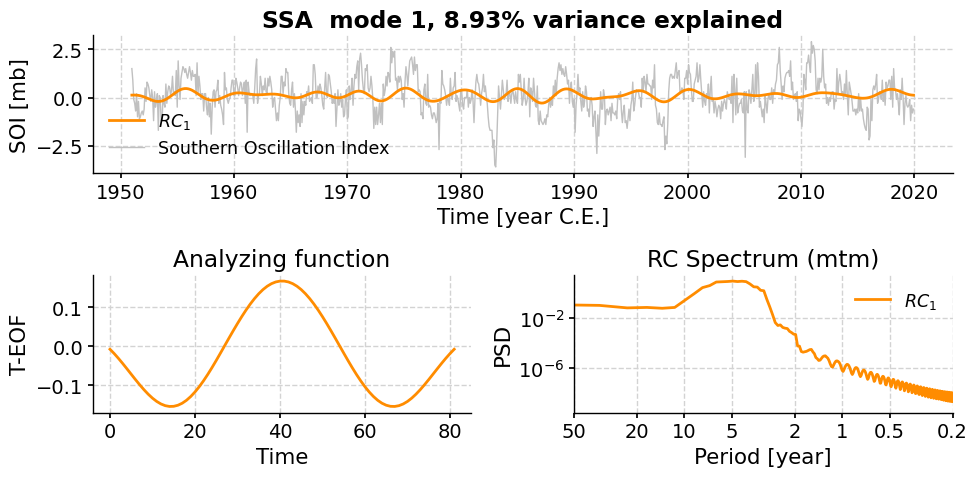

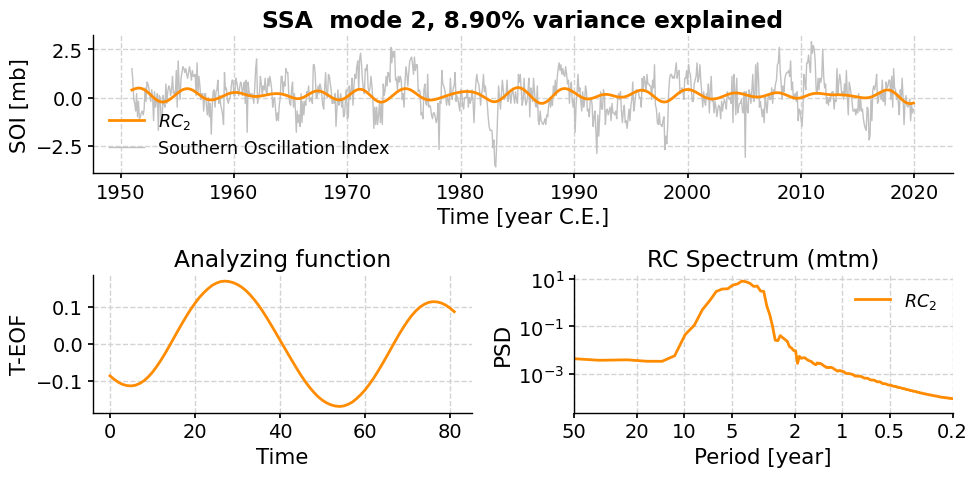

SSA with SOI

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('SOI') fig, ax = ts.plot() nino_ssa = ts.ssa(M=60)

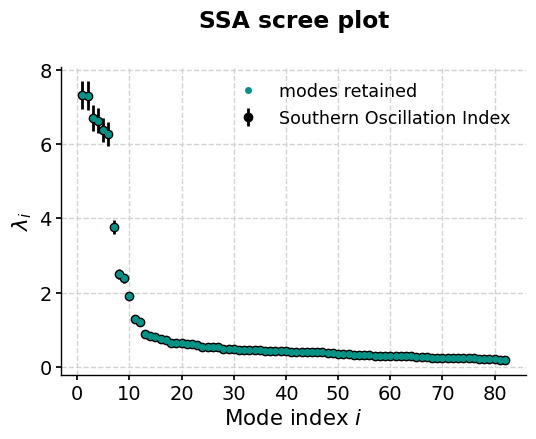

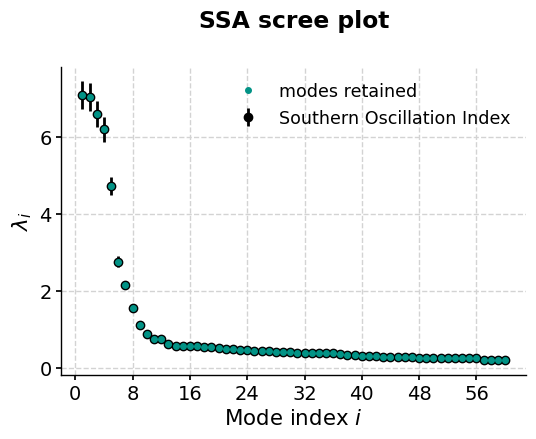

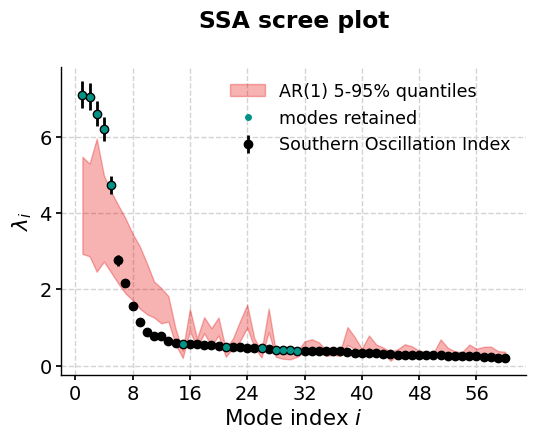

Let us now see how to make use of all these arrays. The first step is to inspect the eigenvalue spectrum (“scree plot”) to identify remarkable modes. Let us restrict ourselves to the first 40, so we can see something:

fig, ax = nino_ssa.screeplot()

- This highlights a few common phenomena with SSA:

the eigenvalues are in descending order

their uncertainties are proportional to the eigenvalues themselves

the eigenvalues tend to come in pairs : (1,2) (3,4), are all clustered within uncertainties . (5,6) looks like another doublet

around i=15, the eigenvalues appear to reach a floor, and all subsequent eigenvalues explain a very small amount of variance.

So, summing the variance of the first 14 modes, we get:

print(nino_ssa.pctvar[:14].sum())

71.61676734218962

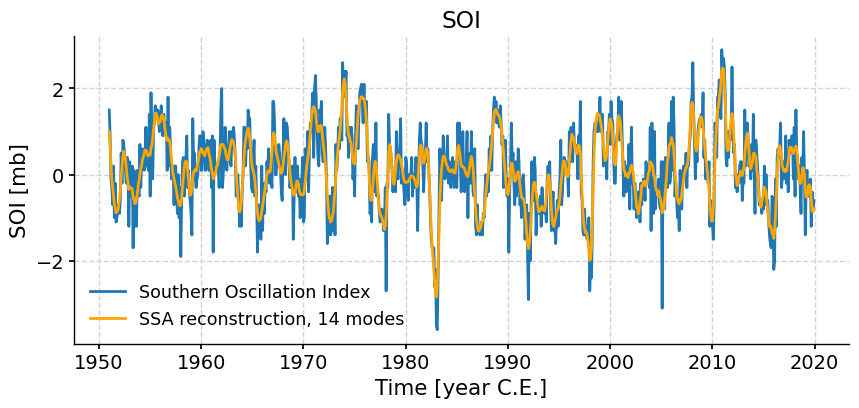

That is a typical result for a (paleo)climate timeseries; a few modes do the vast majority of the work. That means we can focus our attention on these modes and capture most of the interesting behavior. To see this, let’s use the reconstructed components (RCs), and sum the RC matrix over the first 14 columns:

RCmat = nino_ssa.RCmat[:,:14] RCk = (RCmat-RCmat.mean()).sum(axis=1) + ts.value.mean() fig, ax = ts.plot(title='SOI') ax.plot(nino_ssa.orig.time,RCk,label='SSA reconstruction, 14 modes',color='orange') ax.legend()

<matplotlib.legend.Legend at 0x7f278237e150>

- Indeed, these first few modes capture the vast majority of the low-frequency behavior, including all the El Niño/La Niña events. What is left (the blue wiggles not captured in the orange curve) are high-frequency oscillations that might be considered “noise” from the standpoint of ENSO dynamics. This illustrates how SSA might be used for filtering a timeseries. One must be careful however:

there was not much rhyme or reason for picking 14 modes. Why not 5, or 39? All we have seen so far is that they gather >95% of the variance, which is by no means a magic number.

there is no guarantee that the first few modes will filter out high-frequency behavior, or at what frequency cutoff they will do so. If you need to cut out specific frequencies, you are better off doing it with a classical filter, like the butterworth filter implemented in Pyleoclim. However, in many instances the choice of a cutoff frequency is itself rather arbitrary. In such cases, SSA provides a principled alternative for generating a version of a timeseries that preserves features and excludes others (i.e, a filter).

as with all orthgonal decompositions, summing over all RCs will recover the original signal within numerical precision.

Monte-Carlo SSA

Selecting meaningful modes in eigenproblems (e.g. EOF analysis) is more art than science. However, one technique stands out: Monte Carlo SSA, introduced by Allen & Smith, (1996) to identify SSA modes that rise above what one would expect from “red noise”, specifically an AR(1) process). To run it, simply provide the parameter MC, ideally with a number of iterations sufficient to get decent statistics. Here let’s use MC = 1000. The result will be stored in the eigval_q array, which has the same length as eigval, and its two columns contain the 5% and 95% quantiles of the ensemble of MC-SSA eigenvalues.

nino_mcssa = ts.ssa(M = 60, nMC=1000)

Now let’s look at the result:

fig, ax = nino_mcssa.screeplot() print('Indices of modes retained: '+ str(nino_mcssa.mode_idx))

Indices of modes retained: [ 0 1 2 3 4 14 20 25 27 28 29 30]

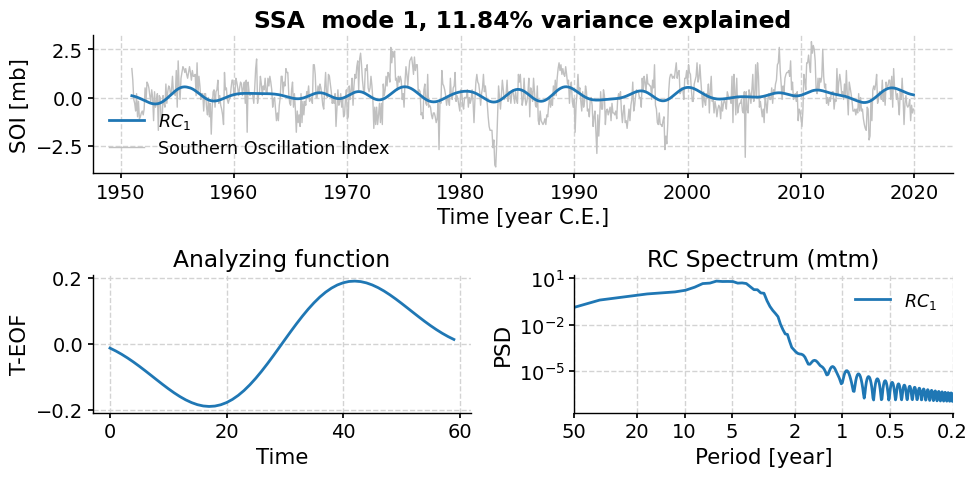

This suggests that modes 1-5 fall above the red noise benchmark, but so do a few others. To inspect mode 1 (index 0), just type:

fig, ax = nino_mcssa.modeplot(index=0)

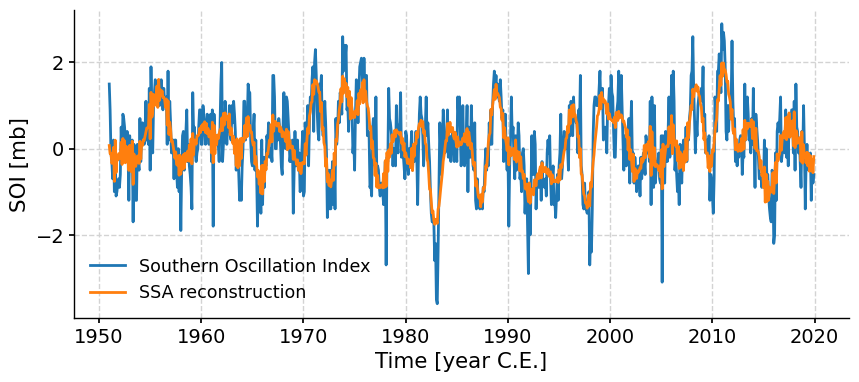

To inspect the reconstructed series, simply do:

fig, ax = ts.plot() nino_mcssa.RCseries.plot(ax=ax)

<Axes: xlabel='Time [year C.E.]', ylabel='SOI [mb]'>

For other truncation methods, see http://linked.earth/PyleoTutorials/notebooks/L2_singular_spectrum_analysis.html

- standardize(keep_log=False, scale=1)[source]

Standardizes the series ((i.e. remove its estimated mean and divides by its estimated standard deviation)

- Returns:

new (Series) – The standardized series object

keep_log (Boolean) – if True, adds the previous mean, standard deviation and method parameters to the series log.

- stats()[source]

Compute basic statistics from a Series

Computes the mean, median, min, max, standard deviation, and interquartile range of a numpy array y, ignoring NaNs.

- Returns:

res – Contains the mean, median, minimum value, maximum value, standard deviation, and interquartile range for the Series.

- Return type:

dictionary

Examples

Compute basic statistics for the SOI series

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('SOI') ts.stats()

{'mean': 0.11992753623188407, 'median': 0.1, 'min': -3.6, 'max': 2.9, 'std': 0.9380195472790024, 'IQR': 1.3}

- stripes(figsize=[8, 1], cmap='RdBu_r', ref_period=None, sat=1.0, top_label=None, bottom_label=None, label_color='gray', label_size=None, xlim=None, xlabel=None, savefig_settings=None, ax=None, invert_xaxis=False, show_xaxis=False, x_offset=0.03)[source]

Represents the Series as an Ed Hawkins “stripes” pattern

Credit: https://matplotlib.org/matplotblog/posts/warming-stripes/

- Parameters:

ref_period (array-like (2-elements)) – dates of the reference period, in the form “(first, last)”

figsize (list) – a list of two integers indicating the figure size (in inches)

cmap (str) – colormap name (https://matplotlib.org/stable/tutorials/colors/colormaps.html)

sat (float > 0) – Controls the saturation of the colormap normalization by scaling the vmin, vmax in https://matplotlib.org/stable/tutorials/colors/colormapnorms.html default = 0.9

xlim (list) – time axis limits

top_label (str) – the “title” label for the stripe

bottom_label (str) – the “ylabel” explaining which variable is being plotted

invert_xaxis (bool, optional) – if True, the x-axis of the plot will be inverted

x_offset (float) – value controlling the horizontal offset between stripes and labels (default = 0.05)

show_xaxis (bool) – flag indicating whether or not the x-axis should be shown (default = False)

savefig_settings (dict) –

the dictionary of arguments for plt.savefig(); some notes below: - “path” must be specified; it can be any existed or non-existed path,

with or without a suffix; if the suffix is not given in “path”, it will follow “format”

”format” can be one of {“pdf”, “eps”, “png”, “ps”}

ax (matplotlib.axis, optional) – the axis object from matplotlib See [matplotlib.axes](https://matplotlib.org/api/axes_api.html) for details.

- Returns:

fig (matplotlib.figure) – the figure object from matplotlib See [matplotlib.pyplot.figure](https://matplotlib.org/stable/api/figure_api.html) for details.

ax (matplotlib.axis) – the axis object from matplotlib See [matplotlib.axes](https://matplotlib.org/stable/api/axes_api.html) for details.

Notes

When ax is passed, the return will be ax only; otherwise, both fig and ax will be returned.

See also

pyleoclim.utils.plotting.stripesstripes representation of a timeseries

pyleoclim.utils.plotting.savefigsaving a figure in Pyleoclim

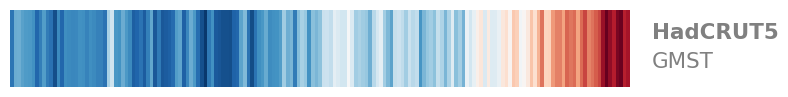

Examples

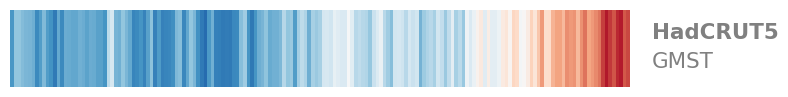

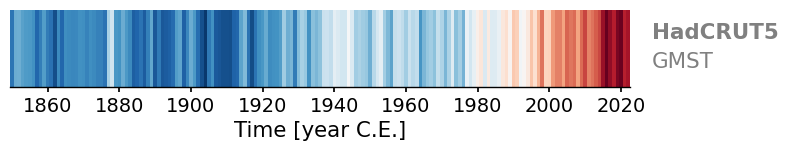

Plot the HadCRUT5 Global Mean Surface Temperature

gmst = pyleo.utils.load_dataset('HadCRUT5') fig, ax = gmst.stripes(ref_period=(1971,2000))

For a more pastel tone, dial down saturation:

fig, ax = gmst.stripes(ref_period=(1971,2000), sat = 0.8)

To change the colormap:

fig, ax = gmst.stripes(ref_period=(1971,2000), cmap='Spectral_r') fig, ax = gmst.stripes(ref_period=(1971,2000), cmap='magma_r')

To show the time axis:

fig, ax = gmst.stripes(ref_period=(1971,2000), show_xaxis=True)

- summary_plot(psd, scalogram, figsize=[8, 10], title=None, time_lim=None, value_lim=None, period_lim=None, psd_lim=None, time_label=None, value_label=None, period_label=None, psd_label=None, ts_plot_kwargs=None, wavelet_plot_kwargs=None, psd_plot_kwargs=None, gridspec_kwargs=None, y_label_loc=None, legend=None, savefig_settings=None)[source]

Produce summary plot of timeseries.

Generate cohesive plot of timeseries alongside results of wavelet analysis and spectral analysis on said timeseries. Requires wavelet and spectral analysis to be conducted outside of plotting function, psd and scalogram must be passed as arguments.

- Parameters:

psd (PSD) – the PSD object of a Series.

scalogram (Scalogram) – the Scalogram object of a Series. If the passed scalogram object contains stored signif_scals these will be plotted.

figsize (list) – a list of two integers indicating the figure size

title (str) – the title for the figure

time_lim (list or tuple) – the limitation of the time axis. This is for display purposes only, the scalogram and psd will still be calculated using the full time series.

value_lim (list or tuple) – the limitation of the value axis of the timeseries. This is for display purposes only, the scalogram and psd will still be calculated using the full time series.

period_lim (list or tuple) – the limitation of the period axis

psd_lim (list or tuple) – the limitation of the psd axis

time_label (str) – the label for the time axis

value_label (str) – the label for the value axis of the timeseries

period_label (str) – the label for the period axis

psd_label (str) – the label for the amplitude axis of PDS

legend (bool) – if set to True, a legend will be added to the open space above the psd plot

ts_plot_kwargs (dict) – arguments to be passed to the timeseries subplot, see Series.plot for details

wavelet_plot_kwargs (dict) – arguments to be passed to the scalogram plot, see pyleoclim.Scalogram.plot for details

psd_plot_kwargs (dict) –

arguments to be passed to the psd plot, see PSD.plot for details Certain psd plot settings are required by summary plot formatting. These include:

ylabel

legend

tick parameters

These will be overriden by summary plot to prevent formatting errors

gridspec_kwargs (dict) –

arguments used to build the specifications for gridspec configuration The plot is constructed with six slots:

slot [0] contains a subgridspec containing the timeseries and scalogram (shared x axis)

slot [1] contains a subgridspec containing an empty slot and the PSD plot (shared y axis with scalogram)

slot [2] and slot [3] are empty to allow ample room for xlabels for the scalogram and PSD plots

slot [4] contains the scalogram color bar

slot [5] is empty

It is possible to tune the size and spacing of the various slots:

’width_ratios’: list of two values describing the relative widths of the column containig the timeseries/scalogram/colorbar and the column containig the PSD plot (default: [6, 1])

’height_ratios’: list of three values describing the relative heights of the three timeseries, scalogram and colorbar (default: [2, 7, .35])

’hspace’: vertical space between timeseries and scalogram (default: 0, however if either the scalogram xlabel or the PSD xlabel contain ‘n’, .05)

’wspace’: lateral space between scalogram and psd plot (default: 0)

’cbspace’: vertical space between the scalogram and colorbar

y_label_loc (float) – Plot parameter to adjust horizontal location of y labels to avoid conflict with axis labels, default value is -0.15

savefig_settings (dict) –

the dictionary of arguments for plt.savefig(); some notes below: - “path” must be specified; it can be any existed or non-existed path,

with or without a suffix; if the suffix is not given in “path”, it will follow “format”

”format” can be one of {“pdf”, “eps”, “png”, “ps”}

See also

pyleoclim.core.series.Series.spectralSpectral analysis for a timeseries

pyleoclim.core.series.Series.waveletWavelet analysis for a timeseries

pyleoclim.utils.plotting.savefigsaving figure in Pyleoclim

pyleoclim.core.psds.PSDPSD object

pyleoclim.core.psds.MultiplePSDMultiple PSD object

Examples

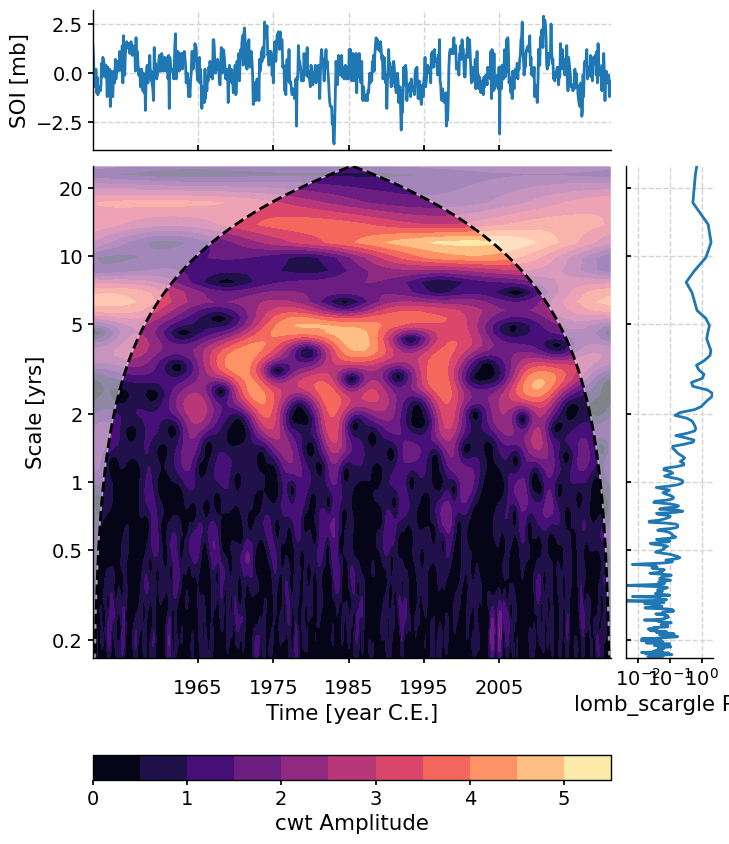

Summary_plot with pre-generated psd and scalogram objects. Note that if the scalogram contains saved noise realizations these will be flexibly reused. See pyleo.Scalogram.signif_test() for details

import pyleoclim as pyleo series = pyleo.utils.load_dataset('SOI') psd = series.spectral(freq_method = 'welch') scalogram = series.wavelet(freq_method = 'welch') fig, ax = series.summary_plot(psd = psd,scalogram = scalogram)

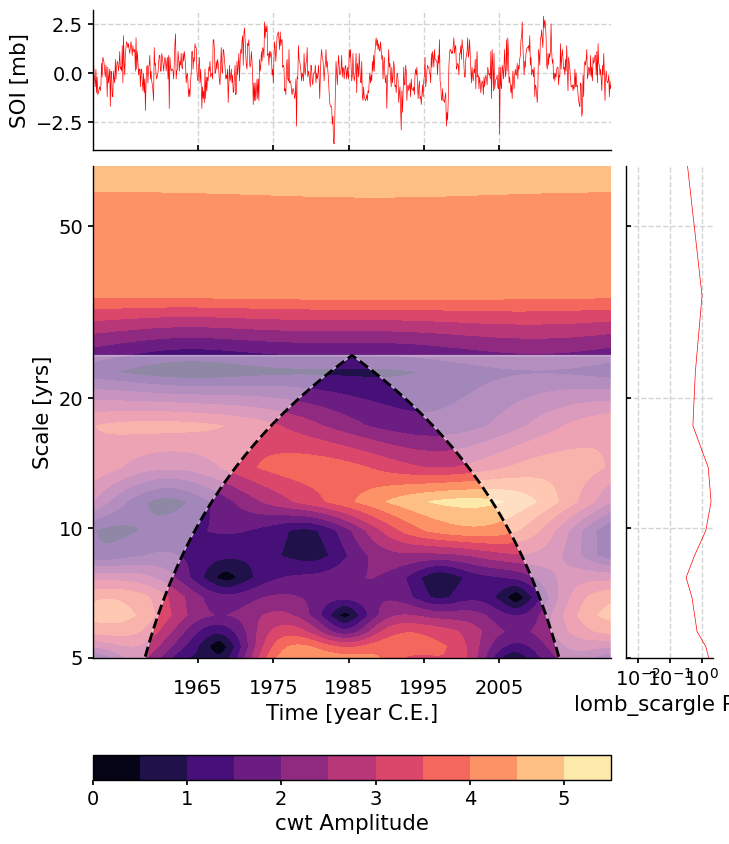

Summary_plot with pre-generated psd and scalogram objects from before and some plot modification arguments passed. Note that if the scalogram contains saved noise realizations these will be flexibly reused. See pyleo.Scalogram.signif_test() for details

import pyleoclim as pyleo series = pyleo.utils.load_dataset('SOI') psd = series.spectral(freq_method = 'welch') scalogram = series.wavelet(freq_method = 'welch') fig, ax = series.summary_plot(psd = psd,scalogram = scalogram, period_lim = [5,0], ts_plot_kwargs = {'color':'red','linewidth':.5}, psd_plot_kwargs = {'color':'red','linewidth':.5})

- surrogates(method='ar1sim', number=1, length=None, seed=None, settings=None)[source]

Generate surrogates of the Series object according to “method”

For now, assumes uniform spacing and increasing time axis

- Parameters:

method ({ar1sim, phaseran}) –

The method used to generate surrogates of the timeseries

Note that phaseran assumes an odd number of samples. If the series has even length, the last point is dropped to satisfy this requirement

number (int) – The number of surrogates to generate

length (int) – Length of the series

seed (int) – Control seed option for reproducibility

settings (dict) – Parameters for surrogate generator. See individual methods for details.

- Returns:

surr

- Return type:

See also

pyleoclim.utils.tsmodel.ar1_simAR(1) simulator

pyleoclim.utils.tsutils.phaseranphase randomization

- to_csv(metadata_header=True, path=None)[source]

Export Series to csv

- Parameters:

metadata_header (boolean, optional) – DESCRIPTION. The default is True.

path (str, optional) – system path to save the file. Default is ‘.’

- Return type:

None

See also

pyleoclim.Series.from_csvExamples

import pyleoclim as pyleo LR04 = pyleo.utils.load_dataset('LR04') LR04.to_csv() lr04 = pyleo.Series.from_csv('LR04_benthic_stack.csv') LR04.equals(lr04)

Series exported to LR04_benthic_stack.csv Time axis values sorted in ascending order

(True, True)

- to_json(path=None)[source]

Export the pyleoclim.Series object to a json file

- Parameters:

path (string, optional) – The path to the file. The default is None, resulting in a file saved in the current working directory using the label for the dataset as filename if available or ‘series.json’ if label is not provided.

- Return type:

None.

Examples

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('SOI') ts.to_json('soi.json')

- to_pandas(paleo_style=False)[source]

Export to pandas Series

- Parameters:

paleo_style (boolean, optional) – If True, will replace datetime with time and label columns with units . The default is False.

- Returns:

ser

- Return type:

pd.Series representation of the pyleo.Series object

- view()[source]

Generates a DataFrame version of the Series object, suitable for viewing in a Jupyter Notebook

- Return type:

pd.DataFrame

Examples

Plot the HadCRUT5 Global Mean Surface Temperature

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('HadCRUT5') ts.view()

GMST [$^{\circ}$C] Time [year C.E.] 1850.0 -0.417659 1851.0 -0.233350 1852.0 -0.229399 1853.0 -0.270354 1854.0 -0.291630 ... ... 2018.0 0.762654 2019.0 0.891073 2020.0 0.922794 2021.0 0.761856 2022.0 0.801242 173 rows × 1 columns

- wavelet(method='cwt', settings=None, freq_method='log', freq_kwargs=None, verbose=False)[source]

Perform wavelet analysis on a timeseries

- Parameters:

method (str {wwz, cwt}) –

- cwt - the continuous wavelet transform [1]

is appropriate for evenly-spaced series.

- wwz - the weighted wavelet Z-transform [2]

is appropriate for unevenly-spaced series.

Default is cwt, returning an error if the Series is unevenly-spaced.

freq_method (str) – {‘log’, ‘scale’, ‘nfft’, ‘lomb_scargle’, ‘welch’}

freq_kwargs (dict) – Arguments for the frequency vector

settings (dict) – Arguments for the specific wavelet method

verbose (bool) – If True, will print warning messages if there are any

- Returns:

scal

- Return type:

Scalogram object

See also

pyleoclim.utils.wavelet.wwzwwz function

pyleoclim.utils.wavelet.cwtcwt function

pyleoclim.utils.spectral.make_freq_vectorFunctions to create the frequency vector

pyleoclim.utils.tsutils.detrendDetrending function

pyleoclim.core.series.Series.spectralspectral analysis tools

pyleoclim.core.scalograms.ScalogramScalogram object

pyleoclim.core.scalograms.MultipleScalogramMultiple Scalogram object

References

[1] Torrence, C. and G. P. Compo, 1998: A Practical Guide to Wavelet Analysis. Bull. Amer. Meteor. Soc., 79, 61-78. Python routines available at http://paos.colorado.edu/research/wavelets/

[2] Foster, G., 1996: Wavelets for period analysis of unevenly sampled time series. The Astronomical Journal, 112, 1709.

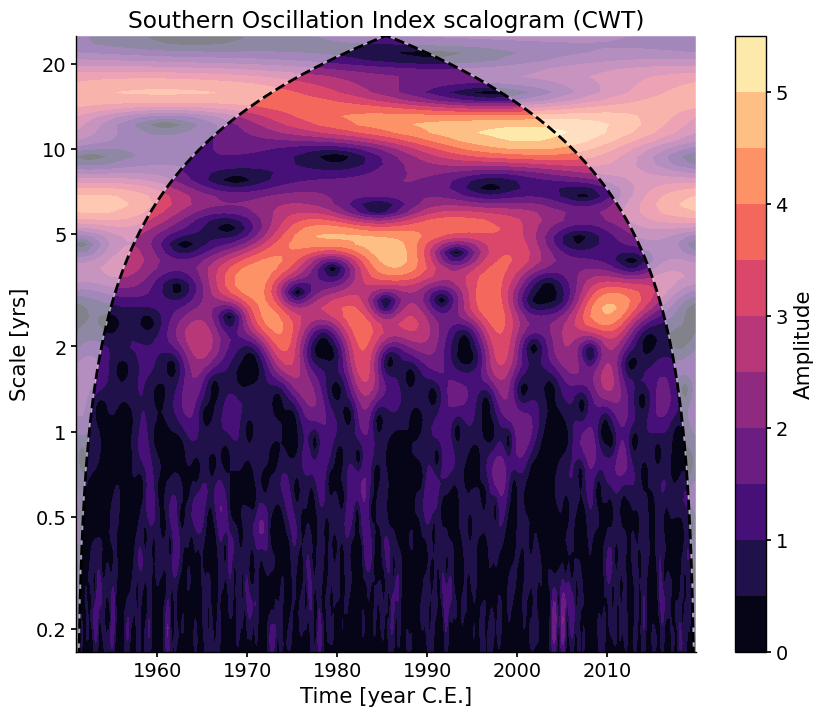

Examples

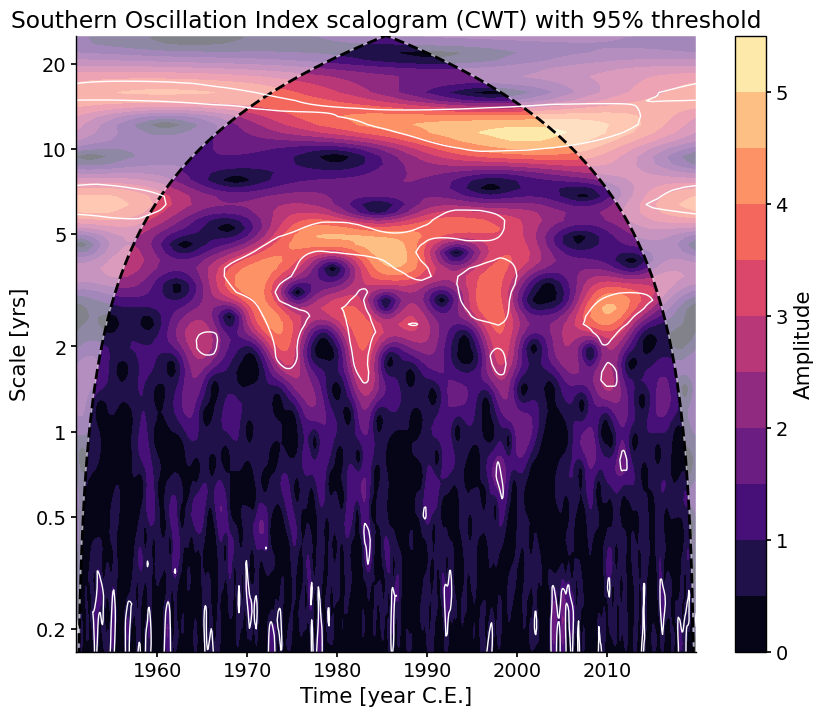

Wavelet analysis on the evenly-spaced SOI record. The CWT method will be applied by default.

import pyleoclim as pyleo ts = pyleo.utils.load_dataset('SOI') scal1 = ts.wavelet() scal_signif = scal1.signif_test(number=20) # for research-grade work, use number=200 or larger fig, ax = scal_signif.plot()

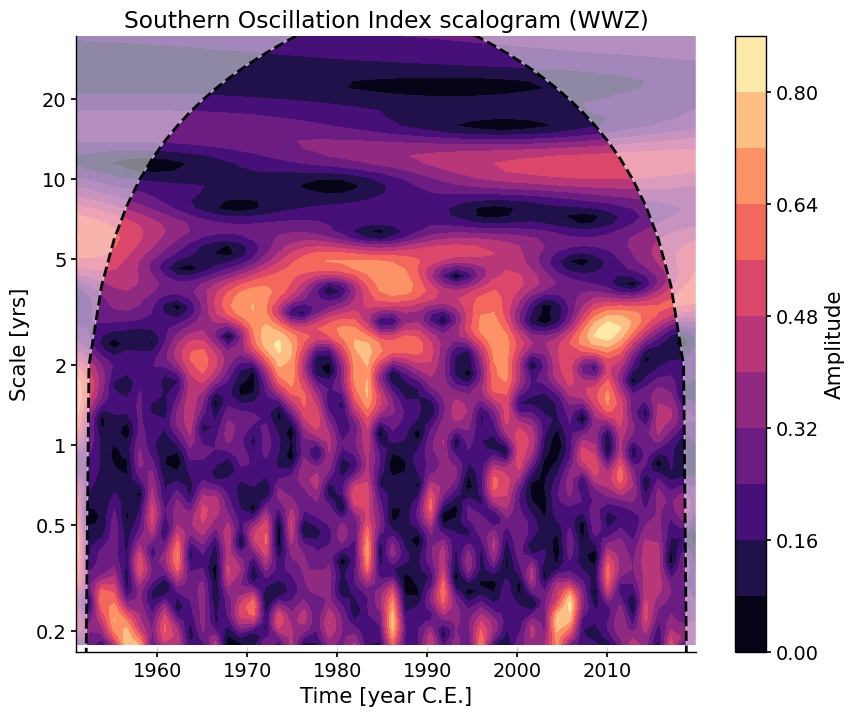

If you wanted to invoke the WWZ method instead (here with no significance testing, to lower computational cost):

scal2 = ts.wavelet(method='wwz') fig, ax = scal2.plot()

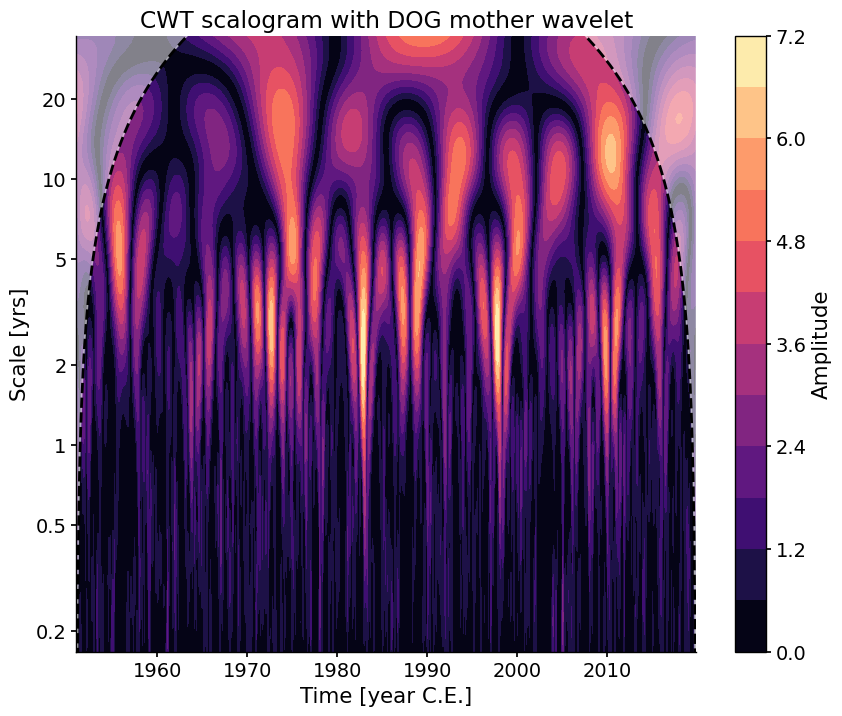

Notice that the two scalograms have different amplitudes, which are relative. Method-specific arguments may be passed via settings. For instance, if you wanted to change the default mother wavelet (‘MORLET’) to a derivative of a Gaussian (DOG), with degree 2 by default (“Mexican Hat wavelet”):

scal3 = ts.wavelet(settings = {'mother':'DOG'}) fig, ax = scal3.plot(title='CWT scalogram with DOG mother wavelet')

As for WWZ, note that, for computational efficiency, the time axis is coarse-grained by default to 50 time points, which explains in part the difference with the CWT scalogram.

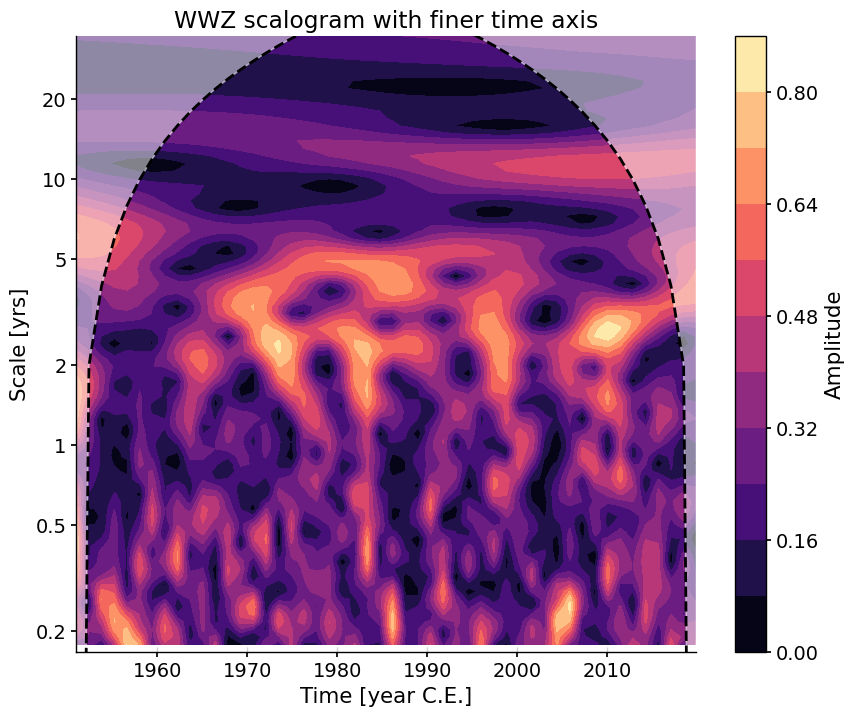

If you need a custom axis, it (and other method-specific parameters) can also be passed via the settings dictionary:

tau = np.linspace(np.min(ts.time), np.max(ts.time), 60) scal4 = ts.wavelet(method='wwz', settings={'tau':tau}) fig, ax = scal4.plot(title='WWZ scalogram with finer time axis')

- wavelet_coherence(target_series, method='cwt', settings=None, freq_method='log', freq_kwargs=None, verbose=False, common_time_kwargs=None)[source]

Performs wavelet coherence analysis with the target timeseries

- Parameters:

target_series (Series) – A pyleoclim Series object on which to perform the coherence analysis

method (str) – Possible methods {‘wwz’,’cwt’}. Default is ‘cwt’, which only works if the series share the same evenly-spaced time axis. ‘wwz’ is designed for unevenly-spaced data, but is far slower.

freq_method (str) – {‘log’,’scale’, ‘nfft’, ‘lomb_scargle’, ‘welch’}

freq_kwargs (dict) – Arguments for frequency vector

common_time_kwargs (dict) – Parameters for the method MultipleSeries.common_time(). Will use interpolation by default.

settings (dict) – Arguments for the specific wavelet method (e.g. decay constant for WWZ, mother wavelet for CWT) and common properties like standardize, detrend, gaussianize, pad, etc.

verbose (bool) – If True, will print warning messages, if any

- Returns:

coh

- Return type:

References

Grinsted, A., Moore, J. C. & Jevrejeva, S. Application of the cross wavelet transform and wavelet coherence to geophysical time series. Nonlin. Processes Geophys. 11, 561–566 (2004).

See also

pyleoclim.utils.spectral.make_freq_vectorFunctions to create the frequency vector

pyleoclim.utils.tsutils.detrendDetrending function

pyleoclim.core.multipleseries.MultipleSeries.common_timeput timeseries on common time axis

pyleoclim.core.series.Series.waveletwavelet analysis

pyleoclim.utils.wavelet.wwz_coherencecoherence using the wwz method

pyleoclim.utils.wavelet.cwt_coherencecoherence using the cwt method

Examples

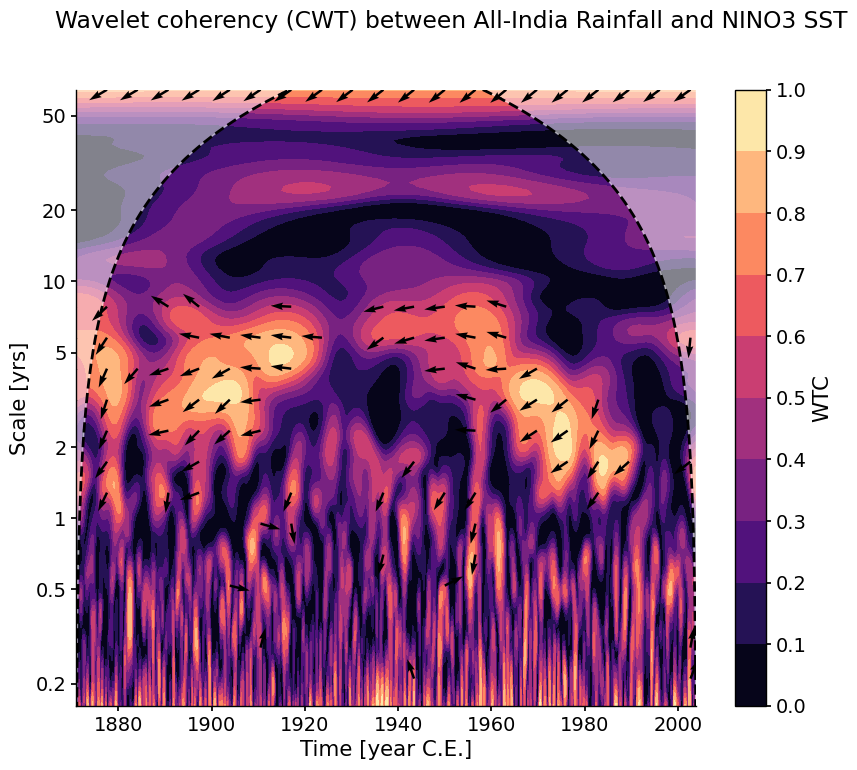

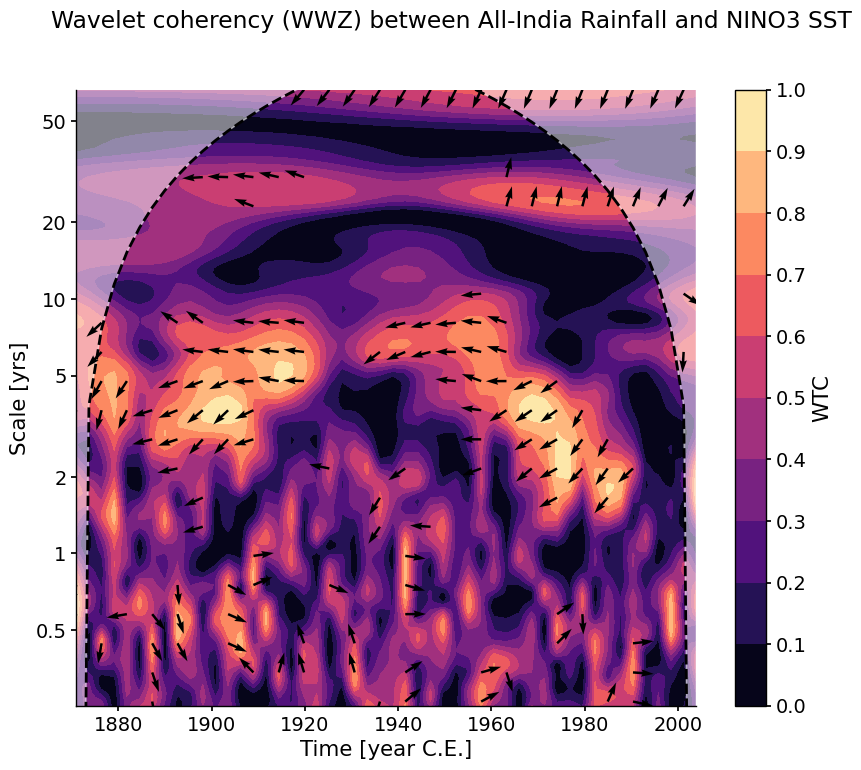

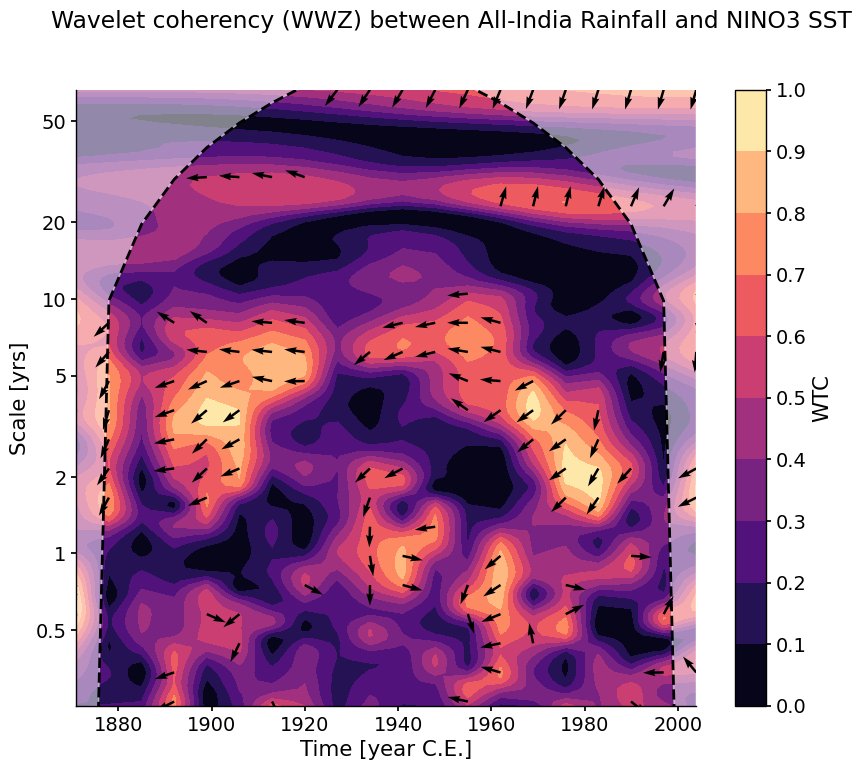

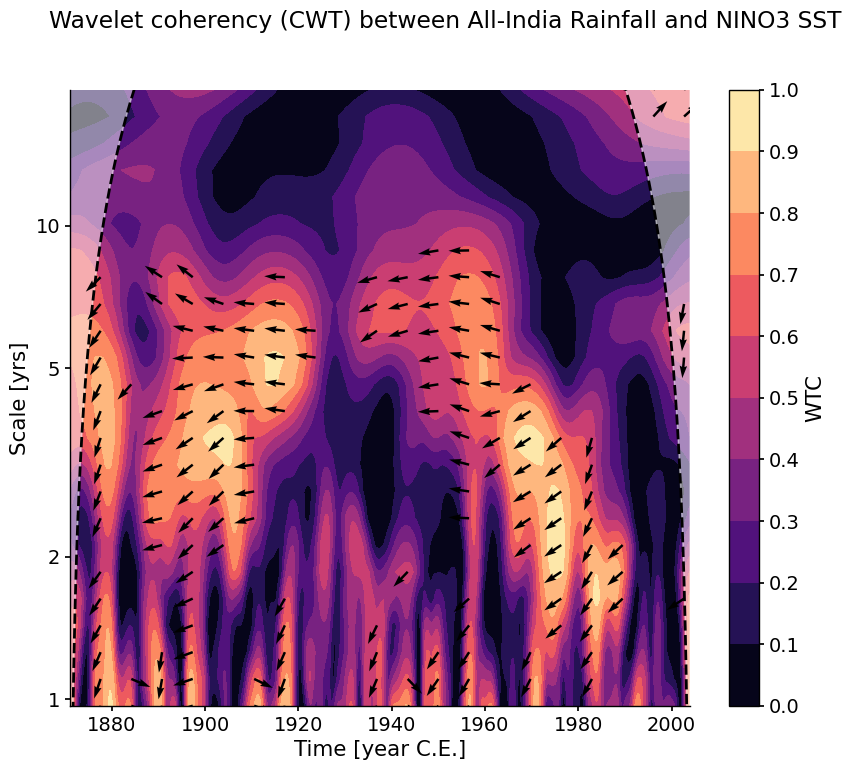

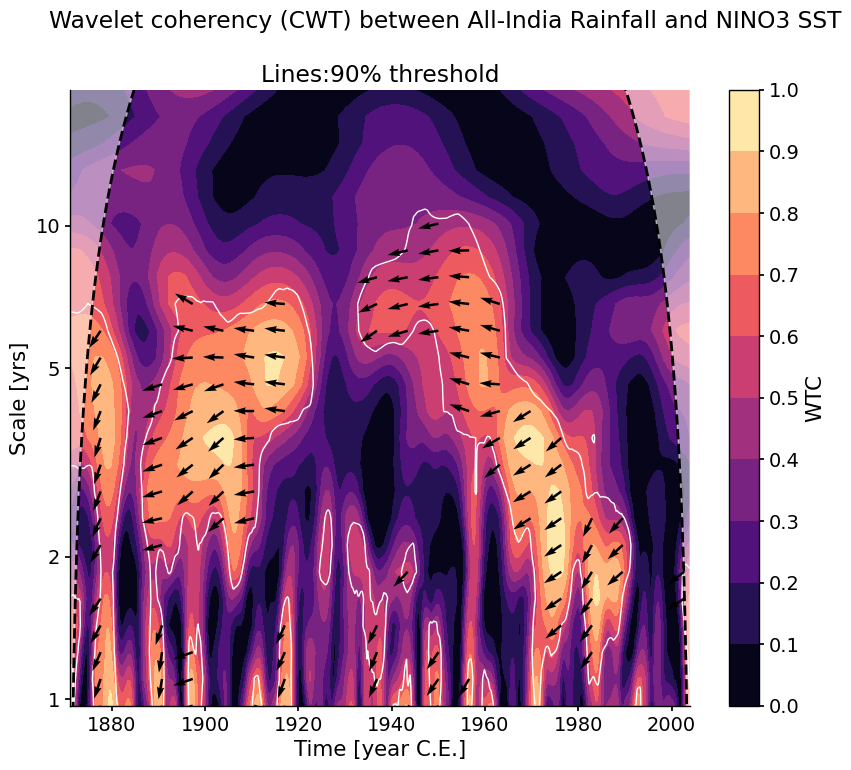

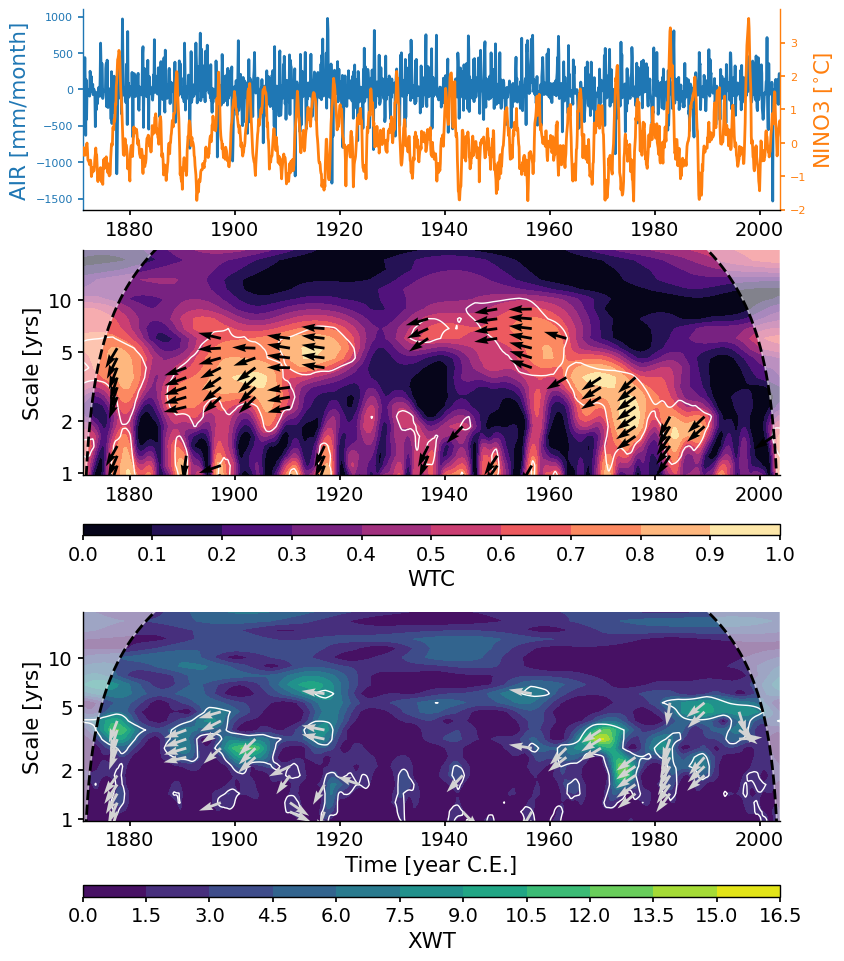

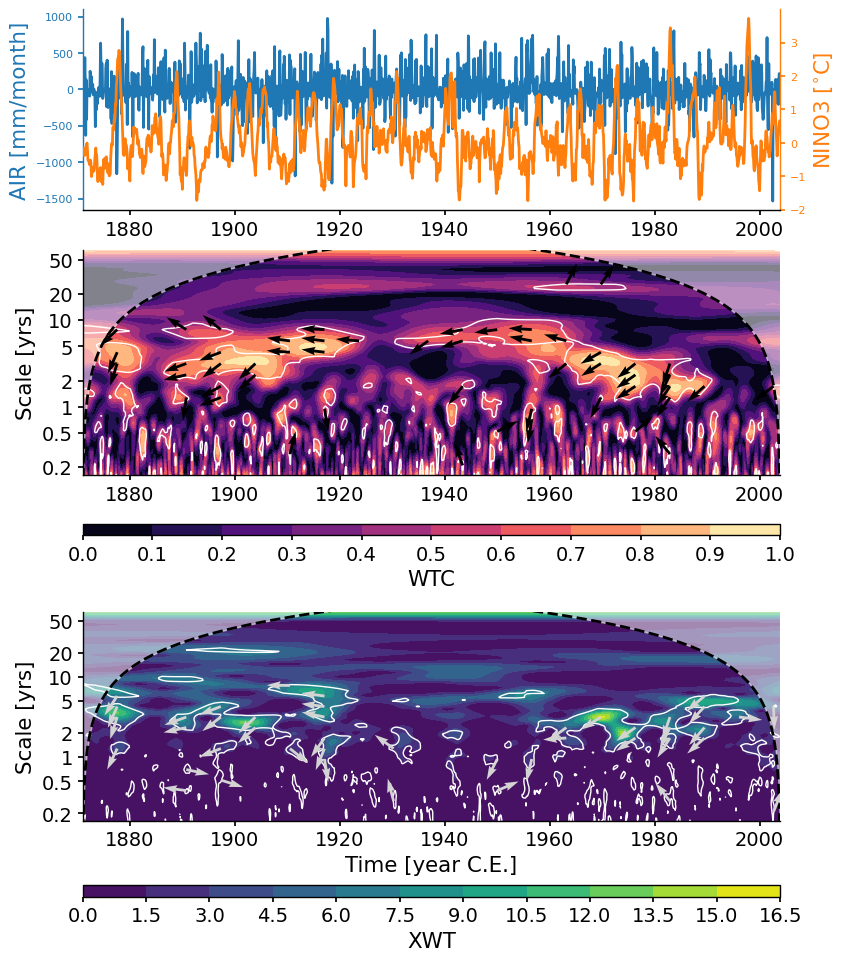

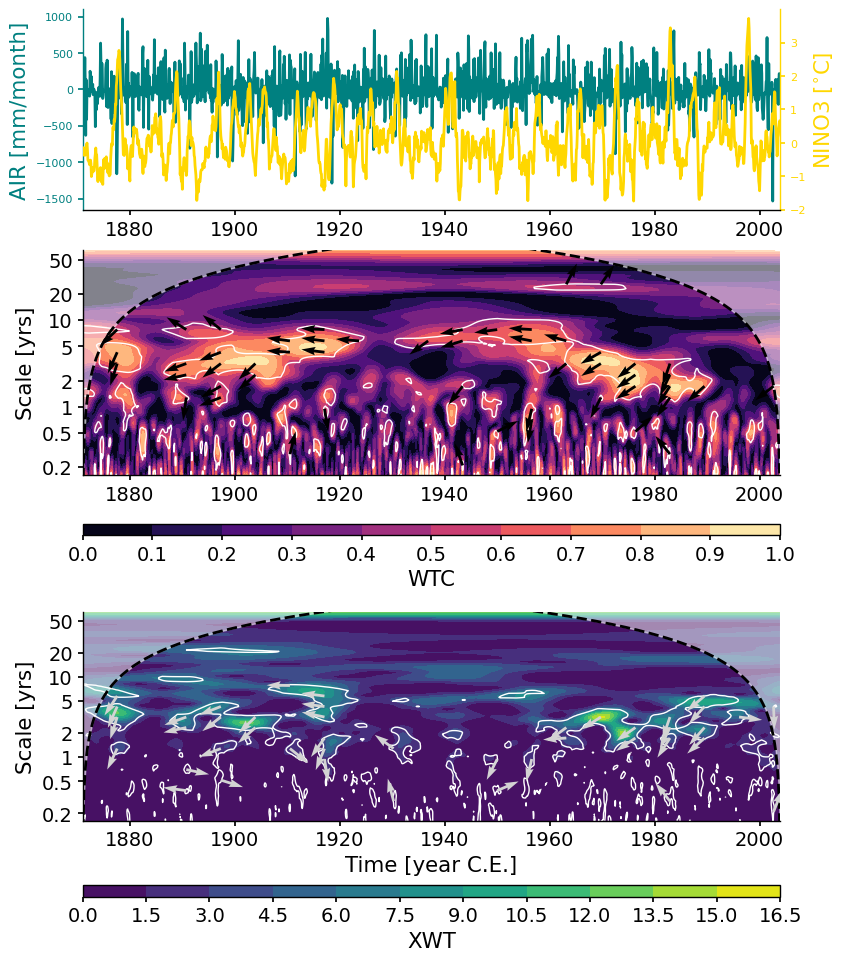

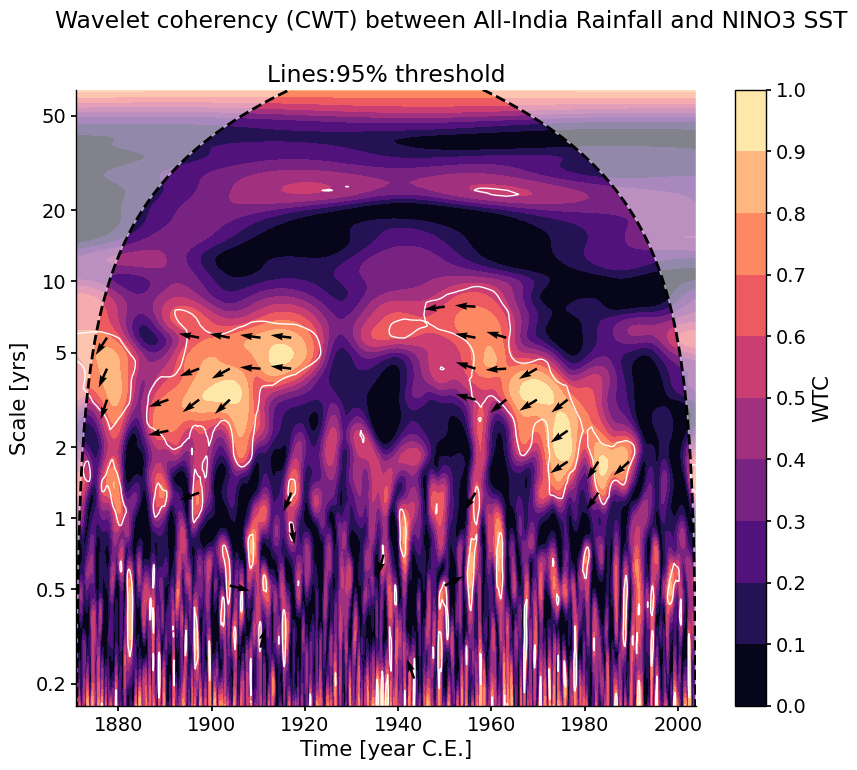

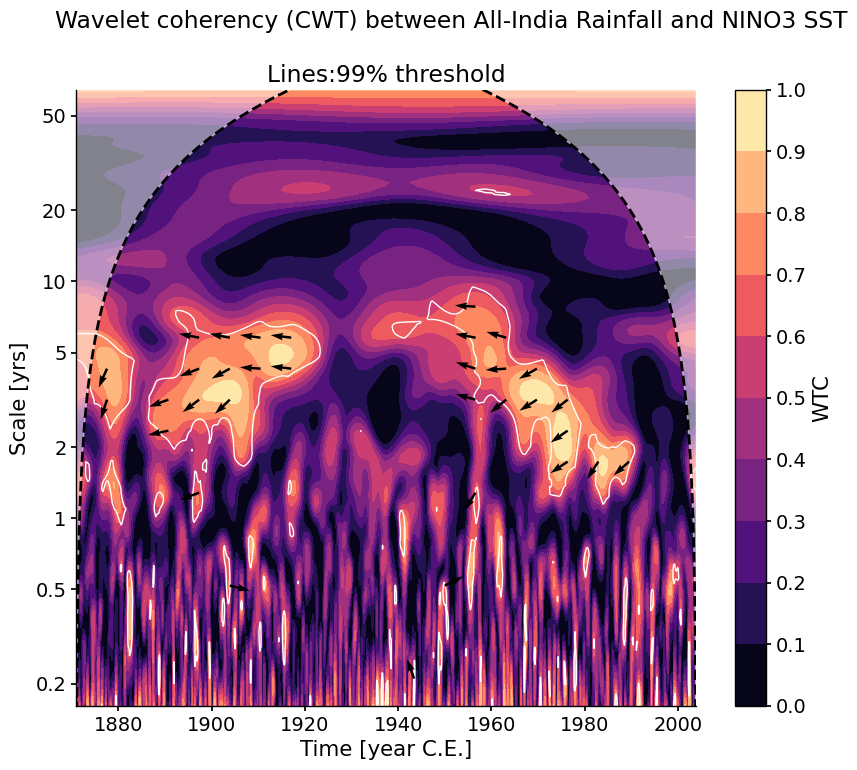

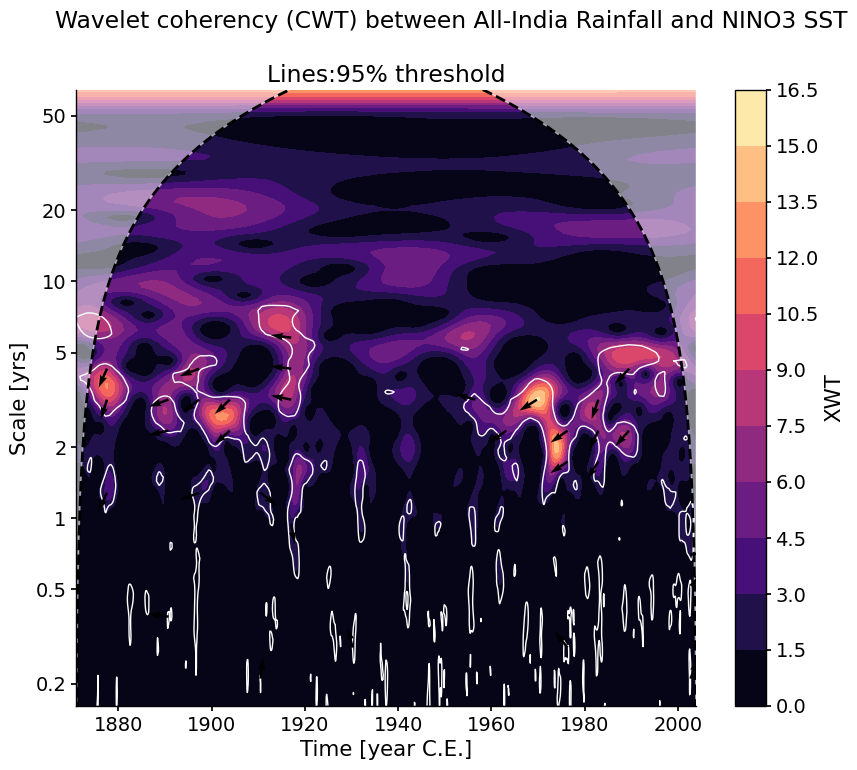

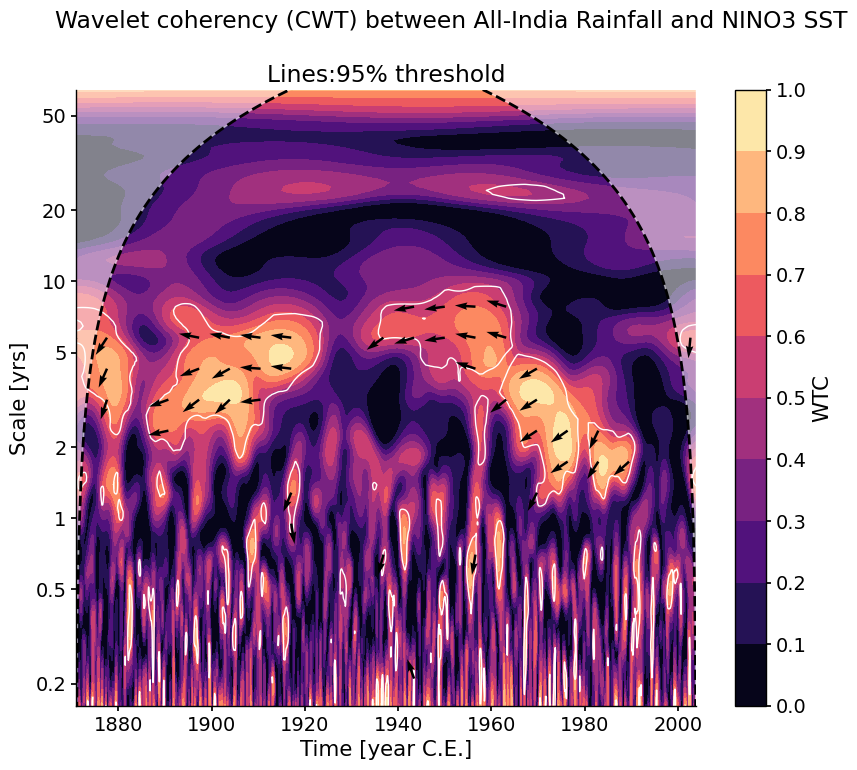

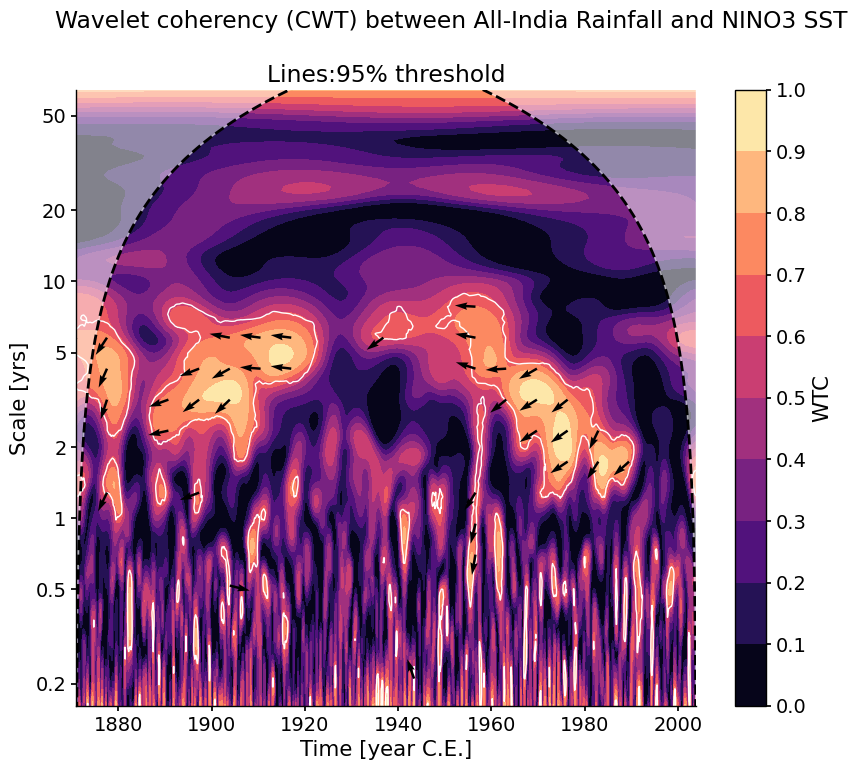

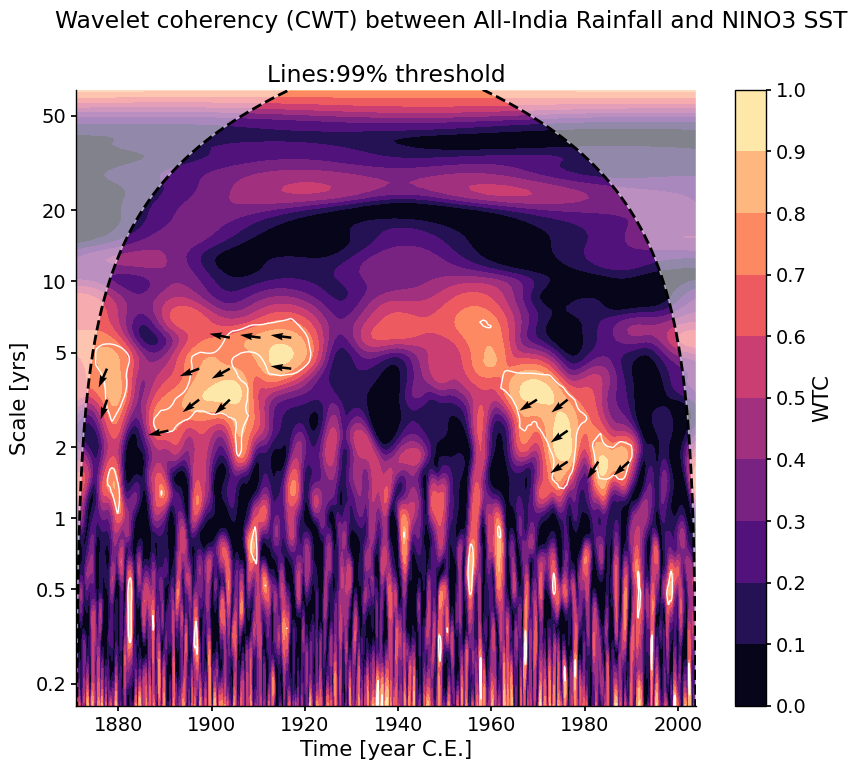

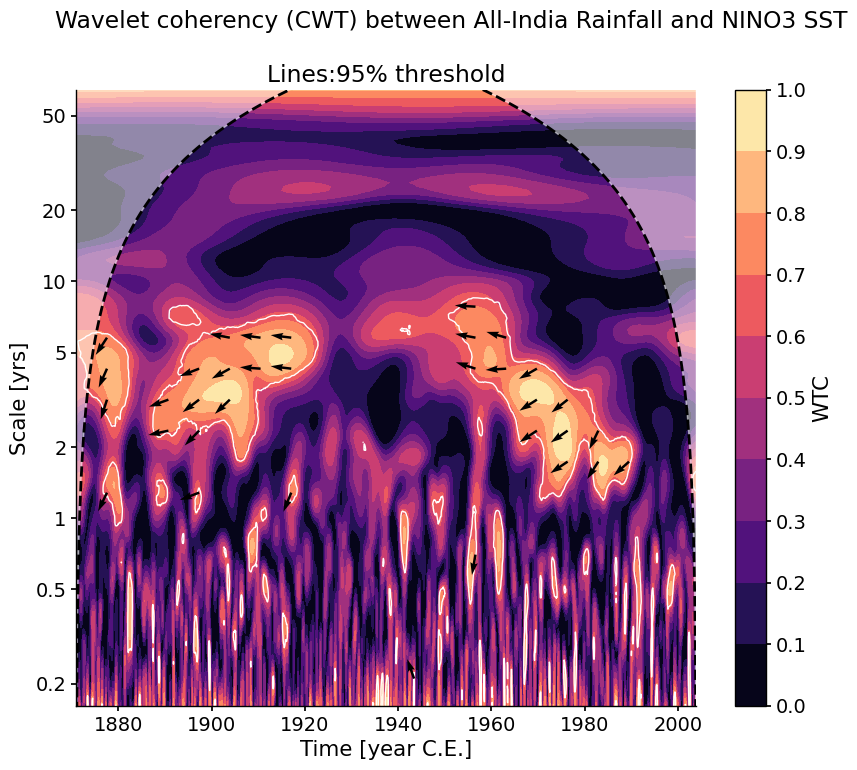

Calculate the wavelet coherence of NINO3 and All India Rainfall with default arguments:

import pyleoclim as pyleo ts_air = pyleo.utils.load_dataset('AIR') ts_nino = pyleo.utils.load_dataset('NINO3') coh = ts_air.wavelet_coherence(ts_nino) coh.plot()

(<Figure size 1000x800 with 2 Axes>, <Axes: xlabel='Time [year C.E.]', ylabel='Scale [yrs]'>)

Note that in this example both timeseries area already on a common, evenly-spaced time axis. If they are not (either because the data are unevenly spaced, or because the time axes are different in some other way), an error will be raised. To circumvent this error, you can either put the series on a common time axis (e.g. using common_time()) prior to applying CWT, or you can use the Weighted Wavelet Z-transform (WWZ) instead, as it is designed for unevenly-spaced data. However, it is usually far slower:

coh_wwz = ts_air.wavelet_coherence(ts_nino, method = 'wwz') coh_wwz.plot()

(<Figure size 1000x800 with 2 Axes>, <Axes: xlabel='Time [year C.E.]', ylabel='Scale [yrs]'>)

As with wavelet analysis, both CWT and WWZ admit optional arguments through settings. For instance, one can adjust the resolution of the time axis on which coherence is evaluated:

coh_wwz = ts_air.wavelet_coherence(ts_nino, method = 'wwz', settings = {'ntau':20}) coh_wwz.plot()

(<Figure size 1000x800 with 2 Axes>, <Axes: xlabel='Time [year C.E.]', ylabel='Scale [yrs]'>)

The frequency (scale) axis can also be customized, e.g. to focus on scales from 1 to 20y, with 24 scales:

coh = ts_air.wavelet_coherence(ts_nino, freq_kwargs={'fmin':1/20,'fmax':1,'nf':24}) coh.plot()

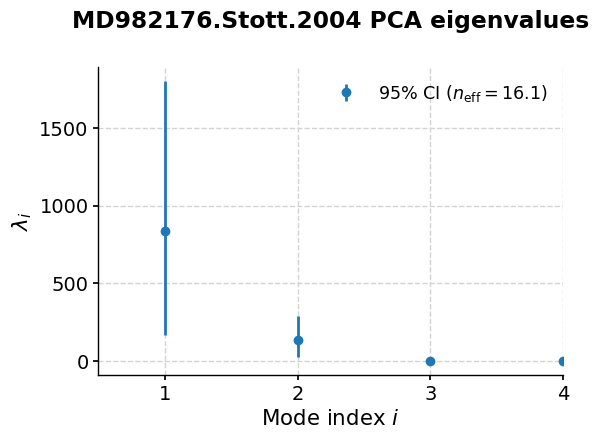

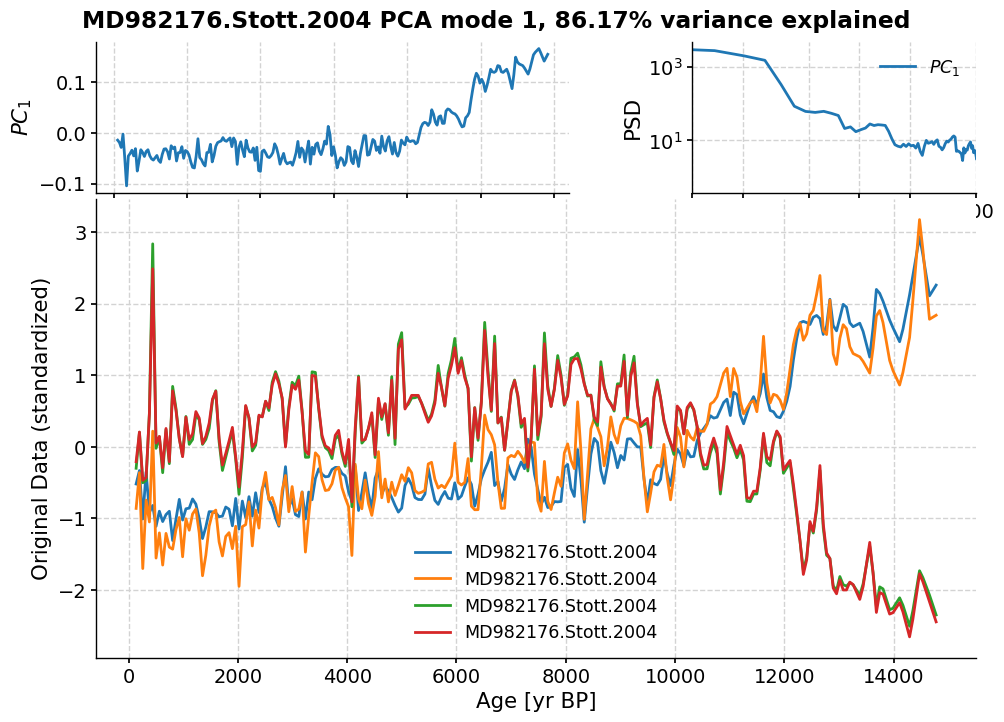

(<Figure size 1000x800 with 2 Axes>, <Axes: xlabel='Time [year C.E.]', ylabel='Scale [yrs]'>)